CES 2023: Exwayz to showcase the first plug-and-play software that will guide 100 million autonomous robots

Exwayz, a pioneer in plug-and-play 3D spatial intelligence software, today unveils Exwayz SLAM, its new-generation software that simplifies and accelerates the integration of 3D LiDAR into autonomous systems. It is the first fully software-based solution to provide a LiDAR-based localization stack that meets the industry's robustness requirements. Cost-effective and requiring no LiDAR expertise, this software is sensor-agnostic and suited to any mobile robotics use case, such as autonomous delivery, autonomous vehicles or logistics. Through cutting-edge innovations in optimization and 3D vision, it achieves unprecedented robustness and centimeter-level precision. With Exwayz SLAM, mobile robot intelligence becomes a reality through advanced 3D perception. Welcome to the new era of robot mobility!

● Addresses the technical challenge of autonomous navigation

● Versatile: works both indoors and outdoors, including in GPS-denied environments

● Accurate: positioning precision of less than 2 cm

● Available as an SDK for seamless integration

● Already trusted by leading LiDAR manufacturers such as Innoviz, Cepton and Ouster, whose sensors are used by industry leaders

The global industry will require 100 million autonomous mobile robots per year by 2030[1], whether for handling, industrial inspection, delivery or autonomous transportation. The major challenge is providing advanced 3D perception, the essential prerequisite for autonomy. First and foremost, a robot needs to know its precise position in its environment.

LiDAR, an obvious but inaccessible solution for autonomous navigation

LiDAR has quickly established itself as THE perception sensor for autonomous robotics, thanks to its ability to provide accurate 3D representations (i.e. scans) of the environment, even in adverse conditions such as darkness or fog, and in GPS-denied environments. However, implementing a LiDAR-based solution for real-world use cases can still be daunting. LiDAR data processing has been a major challenge for years: even experienced engineering teams struggle when it must be done in real time, which is the case for the vast majority of robotic use cases. As a result, LiDAR remains largely inaccessible, despite the attention it has attracted in recent years and despite being widely recognized as the best solution for accurate navigation in varied environments. Exwayz's solution is a game-changer for those integrating LiDAR sensors into their systems.

Hassan Bouchiba, CEO of Exwayz, says: “We are proud to introduce our new software, Exwayz SLAM, at CES this year. It aims to save autonomous system manufacturers years of hard software development. LiDAR SLAM, which enables self-positioning and mapping, has been talked about a lot in recent years, both in academic research and in industrial projects. The reality is that autonomy can only happen with robust, accurate, reliable and truly real-time algorithms, and these are exactly what currently available solutions lack. We are changing the game by bringing Exwayz SLAM to market: the first software that fits the industry's needs and that will perform as well on your own system as in our YouTube videos.”

Exwayz SLAM is already guiding true autonomous systems

Sensor-agnostic and plug-and-play, Exwayz SLAM drastically simplifies the use of 3D LiDAR sensors for any mobile robotics application. Robot manufacturers can rely on Exwayz software to achieve self-localization accurate to 2 cm for their systems, without any expertise in 3D data processing.

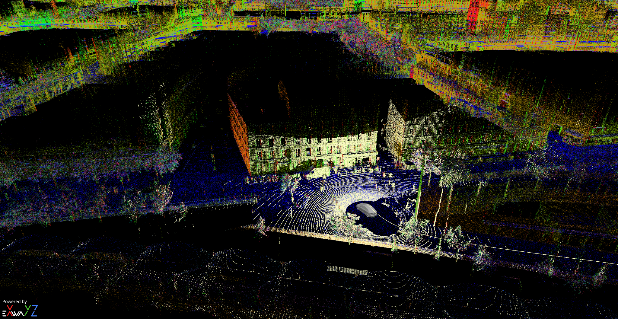

Example of autonomous relocalization with Exwayz SLAM: a 3D map (in color) was first created with the Exwayz SLAM algorithm. The current LiDAR data (in white) is relocalized on this map, which is why it overlaps the map perfectly. This makes it possible to compute the system's trajectory (white line), i.e. its exact position and orientation at each moment.

Exwayz SLAM is already deployed on several robots around the world, across various use cases.

For example, picking robots carry parcels 24/7 in a large logistics warehouse and find their way using Exwayz SLAM. Logistics companies are eager to delegate these thankless tasks to robots, as they struggle to find people to do them. Robots are also useful for inspecting inaccessible or hazardous areas: Exwayz SLAM is used, for instance, to navigate robots through radioactive zones during measurement campaigns that detect contamination risks for humans. Autonomous transportation is another area where the SLAM is making a meaningful contribution. Beyond the well-known big names in this field, many smaller players are developing the autonomous transportation of tomorrow, and not only cars: trains too. Exwayz SLAM is currently helping some of these innovative companies reach maturity by providing them with the right tools.

Because dense 3D point clouds are a byproduct of SLAM, a variety of geospatial players use Exwayz software to produce centimeter-accurate 3D representations of infrastructure. These are essential for creating digital twins and are therefore valuable for accurate asset management.

Multiple partnerships with leading 3D LiDAR manufacturers and software providers

Exwayz has already signed strategic partnerships with leading 3D LiDAR sensor manufacturers such as Ouster, Cepton and Innoviz to accelerate the development of autonomous robots, combining the best hardware and software technologies for tomorrow's intelligent systems. Exwayz is also partnering with dSPACE and Intempora, two major players in the embedded software industry, to make integrating Exwayz SLAM at their customers' sites even simpler and safer.

How it works: how can SLAM help a robot navigate?

3D LiDAR SLAM (Simultaneous Localization and Mapping) is a set of algorithms that compute the position of the sensor by matching consecutive scans with one another. The output of a LiDAR SLAM algorithm is usually a trajectory, i.e. the 3D position and orientation of the sensor at each moment, together with a 3D point cloud obtained by aggregating all the scans. Because they rely only on geometric matching, these algorithms are well suited to computing the position and orientation of a robotic system where GPS reception is poor, such as indoor sites (warehouses, parking garages, etc.) or areas surrounded by tall structures, as in urban environments.
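To make the two outputs concrete, here is a minimal sketch of how a trajectory and an aggregated point cloud can be built once scan matching has produced a relative rigid transform between each pair of consecutive scans. The helpers `se3` and `accumulate` are hypothetical illustrations, not part of the Exwayz SDK, and the scan-matching step itself is assumed to have already run. For brevity, `se3` only handles rotation about the vertical axis.

```python
import numpy as np


def se3(yaw: float, t) -> np.ndarray:
    """Hypothetical helper: 4x4 homogeneous transform from a yaw angle (rad) and a translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = t
    return T


def accumulate(relative_poses, scans):
    """Chain scan-to-scan transforms into a trajectory and aggregate the scans.

    relative_poses[k] maps points of scan k+1 into the frame of scan k
    (assumed to come from a scan-matching step not shown here).
    scans[k] is an (N_k, 3) array in the sensor frame at time k.
    Returns the list of 4x4 poses (in the frame of the first scan)
    and the stacked (N, 3) point cloud expressed in that same frame.
    """
    poses = [np.eye(4)]  # the first scan defines the map frame
    for T_rel in relative_poses:
        poses.append(poses[-1] @ T_rel)  # compose: map <- k <- k+1

    world_points = []
    for T, scan in zip(poses, scans):
        homog = np.hstack([scan, np.ones((len(scan), 1))])  # (N, 4) homogeneous points
        world_points.append((homog @ T.T)[:, :3])  # apply T to every row
    return poses, np.vstack(world_points)
```

For example, a sensor that moves 1 m forward, turns 90° to the left, and moves 1 m forward again ends up at (1, 1, 0) in the map frame. Because each pose is the product of all previous relative transforms, any error in one scan match propagates to every later pose, which is one reason drift control matters in industrial SLAM.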

In theory, a LiDAR can be used to create a 3D map of the area where a robot will operate, and then to relocalize the robot within this map by matching what it currently “sees” against the 3D map. Ultimately, this provides the same data as a GPS (position and orientation in a map), but far more precisely, 20 times per second, and in all kinds of environments.
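The matching of what the robot currently “sees” against a prebuilt map can be illustrated with classic point-to-point ICP (Iterative Closest Point): pair each point of the current scan with its nearest map point, solve for the rigid transform that best aligns those pairs (the SVD-based Kabsch solution), and repeat. This is a textbook technique chosen for illustration, not Exwayz's algorithm, and the function names are hypothetical. The brute-force nearest-neighbour search is only suitable for small clouds; running at 20 Hz on real scans would require a spatial index such as a k-d tree.

```python
import numpy as np


def best_rigid_transform(src: np.ndarray, dst: np.ndarray):
    """Least-squares R, t such that R @ src_i + t ~= dst_i (Kabsch / SVD)."""
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    H = (src - mu_s).T @ (dst - mu_d)  # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation, not a reflection
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    t = mu_d - R @ mu_s
    return R, t


def relocalize(scan: np.ndarray, map_points: np.ndarray, T_init=None, iterations: int = 30):
    """Hypothetical point-to-point ICP: estimate the 4x4 pose of `scan` (sensor frame) in the map."""
    T = np.eye(4) if T_init is None else T_init.copy()
    for _ in range(iterations):
        # Move the scan into the map frame with the current pose estimate
        moved = scan @ T[:3, :3].T + T[:3, 3]
        # Brute-force nearest map point for every scan point
        d2 = ((moved[:, None, :] - map_points[None, :, :]) ** 2).sum(axis=2)
        matches = map_points[d2.argmin(axis=1)]
        # Best incremental correction for the current correspondences
        R, t = best_rigid_transform(moved, matches)
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        T = step @ T  # apply the correction on top of the current estimate
    return T
```

Point-to-point ICP only converges when the initial guess is reasonably close to the true pose, so a tracking system would typically seed `T_init` with the previous pose or an odometry prediction for each new scan.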

In practice, the problem is thorny: an industrial-grade SLAM and relocalization system requires innovations to adapt to each robot manufacturer's LiDAR configuration, to behave well under the robot's specific motion, and to serve as a reliable source of positioning in places where no other subsystem can help. This is precisely where Exwayz SLAM steps in.

About Exwayz

Founded in 2021, Exwayz is a French startup currently based at Station F in the HEC incubation program. Exwayz simplifies the integration of 3D LiDAR sensors in autonomous systems by providing embedded software for mobile robots. It is the first solution to offer real-time navigation together with detection, classification and tracking of objects and obstacles. The Exwayz solution is compatible with all 3D LiDAR sensors on the market and is suited to all mobile robotics use cases. It achieves unparalleled levels of robustness and accuracy through cutting-edge innovations in simultaneous localization and mapping (SLAM) and 3D vision.
