Following the Prompts: Generative AI Powers Smarter Robots With NVIDIA Isaac Platform

Generative AI is reshaping trillion-dollar industries, and NVIDIA, a front-runner in smart robotics, is seizing the moment.

Speaking today as part of a special address ahead of CES, NVIDIA Vice President of Robotics and Edge Computing Deepu Talla detailed how NVIDIA and its partners are bringing generative AI and robotics together.

It’s a natural fit, with a growing roster of partners — including Boston Dynamics, Collaborative Robotics, Covariant, Sanctuary AI, Unitree Robotics and others — embracing GPU-accelerated large language models to bring unprecedented levels of intelligence and adaptability to machines of all kinds.

The timing couldn’t be better.

“Autonomous robots powered by artificial intelligence are being increasingly utilized for improving efficiency, decreasing costs and tackling labor shortages,” Talla said.

Present at the Creation

NVIDIA has been central to the generative AI revolution from the beginning.

In 2016, NVIDIA founder and CEO Jensen Huang hand-delivered the first NVIDIA DGX AI supercomputer to OpenAI. Now, thanks to OpenAI’s ChatGPT, generative AI has become one of the fastest-growing technologies of our time.

And it’s just getting started.

The impact of generative AI will go beyond text and image generation — and into homes and offices, farms and factories, hospitals and laboratories, Talla predicted.

The key: LLMs, akin to the brain’s language center, will let robots understand and respond to human instructions more naturally.

Such machines will be able to learn continuously from humans, from each other and from the world around them.

“Given these attributes, generative AI is well-suited for robotics,” Talla said.

How Robots Are Using Generative AI

Agility Robotics, NTT, and others are incorporating generative AI into their robots to help them understand text or voice commands. Robot vacuum cleaners from Dreame Technology are being trained in simulated living spaces created by generative AI models. And Electric Sheep is developing a world model for autonomous lawn mowing.

NVIDIA technologies such as the NVIDIA Isaac and Jetson platforms, which facilitate the development and deployment of AI-powered robots, are already relied on by more than 1.2 million developers and 10,000 customers and partners.

Many of them are at CES this week, including Analog Devices, Aurora Labs, Canonical, Dreame Innovation Technology, DriveU, e-con Systems, Ecotron, Enchanted Tools, GluxKind, Hesai Technology, Leopard Imaging, Segway-Ninebot, Nodar, Orbbec, Qt Group, Robosense, Spartan Radar, TDK Corporation, Telit, Unitree Robotics, Voyant Photonics and ZVISION Technologies Co., Ltd.

Two Brains Are Better Than One

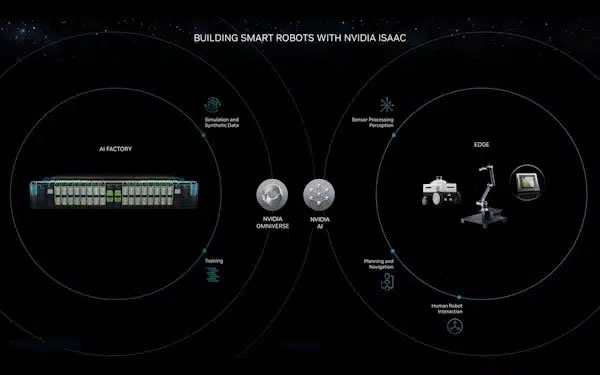

In his talk at CES, Talla showed the dual-computer model (above) essential for deploying AI in robotics, demonstrating NVIDIA’s comprehensive approach to AI development and application.

The first computer, referred to as an “AI factory,” is central to the creation and continuous improvement of AI models.

AI factories use NVIDIA’s data center compute infrastructure along with its AI and NVIDIA Omniverse platforms for the simulation and training of AI models.

The second computer represents the runtime environment of the robot.

This varies depending on the application: It could be in the cloud or a data center; in an on-premises server for tasks like defect inspection in semiconductor manufacturing; or within an autonomous machine equipped with multiple sensors and cameras.

Generating Quality Assets and Scenes

Talla also highlighted the role of LLMs in breaking down technical barriers, turning typical users into technical artists capable of creating complex robotics workcells or entire warehouse simulations.

With generative AI tools like NVIDIA Picasso, users can generate realistic 3D assets from simple text prompts and add them to digital scenes for dynamic and comprehensive robot training environments.

The same capability extends to creating diverse and physically accurate scenarios in Omniverse, enhancing the testing and training of robots to ensure real-world applicability.

This dovetails with the transformative potential of generative AI in reconfiguring the deployment of robots.

Traditionally, robots are purpose-built for specific tasks, and modifying them for different ones is a time-consuming process.

But advancements in LLMs and vision language models are eliminating this bottleneck, enabling more intuitive interactions with robots through natural language, Talla explained.

Such machines — adaptable and aware of the environment around them — will soon spill out across the world.
