Using 3D data for the autonomous robot.

Contributed by IDS Imaging Development Systems

Until recently, robots were "blind" command receivers that followed predefined, fixed paths. Using 3D data, robots can adapt flexibly to the particular situation and react to their surroundings. A promise is becoming a reality: the robot is turning into an autonomous employee. The benefits are fast retooling times, a high variance of workpieces, simple teach-in, and simplified part feeding with a consistently high degree of automation.

In conventional automation, each step in the process is considered and all eventualities are ruled out. Thanks to automation, large quantities of units can be produced extremely efficiently, and a high degree of specialization improves efficiency further. However, this specialized and expensive equipment falls by the wayside when it comes to flexibility and rapid retooling, because each step in the process would need to be adapted and it is simply not cost-effective to do so for a small batch of alternative parts. Small batches are therefore often laboriously produced by hand. While this may be flexible and cost-saving, it is a slow and unstable process.

Robots adapt according to the situation

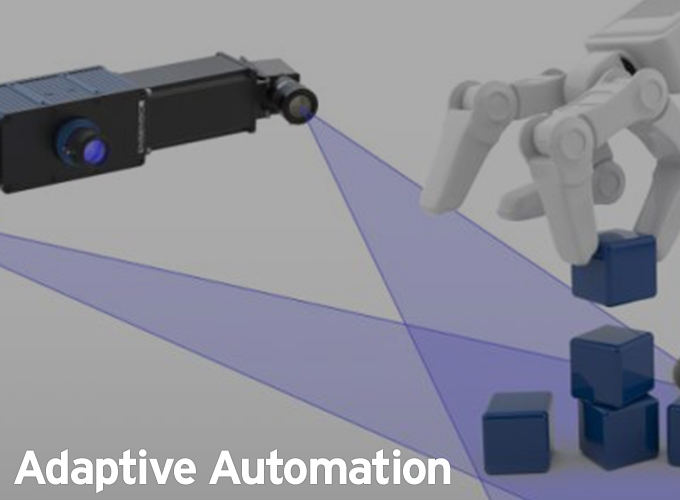

The development of 3D cameras and 3D-capable software has opened up opportunities for the industry to develop brand new machine vision technologies. Thanks to 3D vision, new tasks can be solved that were not possible with 2D.

One robot safely and reliably removes unsorted, overlapping T-pieces for a tube directly from a small transport box. Another robot depalletizes large aluminum parts directly onto a conveyor belt. The delicate movements of its robust gripper find a firm hold at the first attempt without the slightest collision with the workpiece. This is despite the fact that the parts on the used or dirty pallets are often skewed or leaning due to excess casting flash. Robotics has had to step up its game considerably for this kind of bin picking and transfer of parts in the correct position.

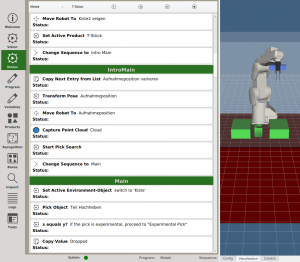

The user can easily change the order of the process steps using MIKADO ARC

The Freiburg-based systems integration company isys vision has developed a solution for this called "MIKADO Adaptive Robot Control" (ARC for short). It is a configurable robot control with its own collision-free path planning. It uses its own inverse kinematics to calculate the joint angles of the robotic arms for gripping positions or traverse paths. 3D information, such as the workpiece shape, position, location, or a virtual image of the surroundings, is used as the reference point for the complex calculations. MIKADO ARC can control a large number of the robots available on the market and makes time-consuming programming unnecessary. Parts can be changed quickly, so even small batches can be produced using this robot-assisted material handling.
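To illustrate what "calculating joint angles for a gripping position" means, here is a minimal sketch of closed-form inverse kinematics for a planar two-link arm. It is a textbook simplification and not the MIKADO ARC implementation, which is not described in this article. The function names, link lengths, and target point are hypothetical and chosen only for illustration.

```python
import math


def two_link_ik(x, y, l1, l2, flip_elbow=False):
    """Joint angles (theta1, theta2) in radians that place the tip of a
    planar two-link arm at (x, y). Hypothetical helper for illustration."""
    r2 = x * x + y * y
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (r2 - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(c2) > 1.0 + 1e-9:
        raise ValueError("target is outside the reachable workspace")
    c2 = max(-1.0, min(1.0, c2))  # guard against rounding at the boundary
    s2 = math.sqrt(1.0 - c2 * c2)
    if flip_elbow:
        s2 = -s2  # select the mirrored elbow configuration
    theta2 = math.atan2(s2, c2)
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def two_link_fk(theta1, theta2, l1, l2):
    """Forward kinematics, used here only to check the IK result."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    # Assumed link lengths (m) and an assumed grip point from a 3D camera.
    t1, t2 = two_link_ik(0.5, 0.3, l1=0.4, l2=0.3)
    print(two_link_fk(t1, t2, 0.4, 0.3))
```

A real six-axis industrial robot has more joints and several valid solutions per pose, and a controller also has to check each candidate against joint limits and obstacles, which is where collision-free path planning comes in.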

3D cameras capture the situation

With a variable baseline and a 100 W texture projector, the stereo vision cameras of the Ensenso X series achieve working distances of up to 5 meters, allowing you to capture objects with volumes of several cubic meters.
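Why the baseline matters for long working distances can be sketched with the standard pinhole stereo relation, in which depth equals focal length times baseline divided by disparity. The numbers below are assumptions for illustration only and are not Ensenso X specifications. The function names are hypothetical.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters from the rectified stereo relation Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_resolution(focal_px, baseline_m, depth_m, disparity_step_px):
    """Approximate depth change per disparity step: dZ = Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_step_px / (focal_px * baseline_m)


if __name__ == "__main__":
    focal_px = 1000.0  # assumed focal length in pixels
    step_px = 0.1      # assumed sub-pixel matching precision
    for baseline_m in (0.5, 1.0):  # assumed baselines in meters
        res = depth_resolution(focal_px, baseline_m, 5.0, step_px)
        print(f"baseline {baseline_m} m: about {res * 1000:.1f} mm at 5 m")
```

Under these assumptions, doubling the baseline halves the depth uncertainty at a given distance. This is the general reason an adjustable baseline helps when the working distance grows.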

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow

IDS Imaging Development Systems Inc.

World-class image processing and industrial cameras "Made in Germany". Machine vision systems from IDS are powerful and easy to use. IDS is a leading provider of area scan cameras with USB and GigE interfaces, 3D industrial cameras and industrial cameras with artificial intelligence. Industrial monitoring cameras with streaming and event recording complete the portfolio. One of IDS's key strengths is customized solutions. An experienced project team of hardware and software developers makes almost anything technically possible to meet individual specifications - from custom design and PCB electronics to specific connector configurations. Whether in an industrial or non-industrial setting: IDS cameras and sensors assist companies worldwide in optimizing processes, ensuring quality, driving research, conserving raw materials, and serving people. They provide reliability, efficiency and flexibility for your application.
