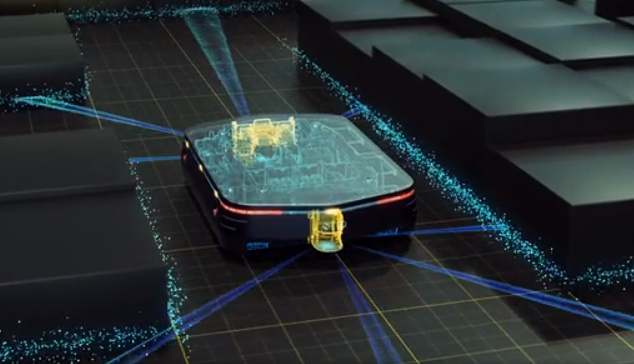

How Industrial Self-driving Vehicles Use Sensors for Localization and Mapping

Rebecca Mayville | OTTO Motors

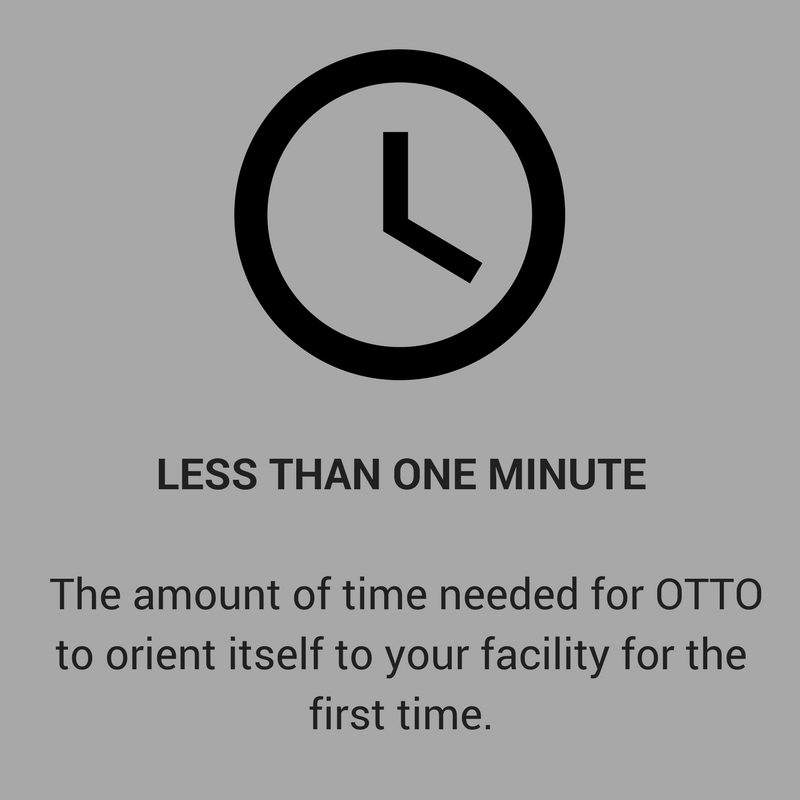

Any investment you make in your operations comes with an expectation that it is going to start providing value back into your business as soon as possible. Reliability and accuracy are crucial to running a production environment where every second counts. Automation solutions like OTTO self-driving vehicles can quickly start contributing to your culture of growth with sensor fusion technology that allows the vehicle to know immediately where it is and where it’s going.

When a new employee steps onto your shop floor, they’re able to look around, ask questions, and get visual and audio cues or directions about where they need to go to do their work. Getting that new employee ramped up to the point where they are making a positive impact on production can be very time- and resource-intensive. And that is if you can find that new employee in the first place.

Manufacturers across the world are experiencing some of the most extensive labor shortages in history. Many of the manufacturers we’ve talked to speak of their own situation as a “labor crisis”. These manufacturers have work to be done but cannot find the employees to do it. And so, they have turned to industrial self-driving vehicles, like OTTO, to complete the material movement so critical to their operation.

But whether a manufacturer is investing in human labor or automation, the expectations remain high. The resource needs to be able to start impacting the business in a positive way as soon as possible. Self-driving vehicles like OTTO are designed to make an almost immediate impact.

The Sensor Fusion of Self-Driving Vehicles

How does an OTTO self-driving vehicle compare to a new employee entering a workplace for the first time? How does that self-driving vehicle navigate through the dense and dynamic environments of industrial spaces?

To drive through the aisles on your shop floor or distribution center, OTTO self-driving vehicles rely on a series of sensors that help the vehicles understand, in real time, the environment they are traveling through. By using a combination of sensor types, each of which serves a distinct purpose, the vehicle is able to map out the way ahead and “see” where it’s going. The technology that allows this to happen is called sensor fusion. It combines data collected from multiple sensors to get an accurate and reliable view of the vehicle’s surroundings.
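To make the idea of sensor fusion more concrete, here is a minimal sketch of one classic fusion technique, a one-dimensional Kalman filter. It assumes a simplified world: the vehicle's position along a single aisle, Gaussian noise, a relative motion estimate (such as from wheel encoders or an IMU) whose uncertainty grows with every step, and an occasional absolute position fix (such as LiDAR matched against a map of the walls). The names `Estimate1D`, `predict` and `update`, and all of the numbers, are hypothetical; this illustrates the general technique and is not OTTO's implementation.

```python
from dataclasses import dataclass


@dataclass
class Estimate1D:
    mean: float      # estimated position along the aisle, metres
    variance: float  # uncertainty of that estimate, square metres


def predict(est: Estimate1D, displacement: float, motion_variance: float) -> Estimate1D:
    """Dead-reckoning step: apply a relative motion estimate; uncertainty grows."""
    return Estimate1D(est.mean + displacement, est.variance + motion_variance)


def update(est: Estimate1D, measurement: float, measurement_variance: float) -> Estimate1D:
    """Correction step: blend in an absolute position fix.

    The gain weights the measurement by how uncertain the current estimate is
    relative to the measurement, so a precise fix pulls the estimate strongly.
    """
    gain = est.variance / (est.variance + measurement_variance)
    return Estimate1D(
        est.mean + gain * (measurement - est.mean),
        (1.0 - gain) * est.variance,
    )


# Hypothetical scenario: five 1 m moves from odometry, then one absolute fix.
est = Estimate1D(mean=0.0, variance=0.01)
for _ in range(5):
    est = predict(est, displacement=1.0, motion_variance=0.04)
fused = update(est, measurement=4.9, measurement_variance=0.01)
print(f"before fix: {est.mean:.3f} m (variance {est.variance:.4f})")
print(f"after fix:  {fused.mean:.3f} m (variance {fused.variance:.4f})")
```

In this example the fused variance (about 0.0095 m²) ends up smaller than that of either the odometry estimate or the absolute fix on its own, which is the practical payoff of combining sensors rather than trusting any single one.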

Where They Are & Where They’re Going

OTTO self-driving vehicles depend on three different sensors to identify their current position and to navigate where and how they need to travel from point A to point B. As in any dense and dynamic environment, elements in OTTO’s path can change from minute to minute, but with sensor fusion, OTTO is able to make immediate adjustments to its travel patterns, always taking the safest, most efficient route.

IMU

IMU stands for Inertial Measurement Unit, an electronic device used in the inertial navigation systems of aircraft, satellites and unmanned systems. An IMU typically combines accelerometers and gyroscopes, and the accelerations and rotation rates it reports are integrated over time to estimate velocity, heading and, from those, current position.

“The IMU sensor tells OTTO if it's moving forwards, backwards, or turning and fuses that information against what it can see and feel. Just like sitting in an IMAX movie can feel like you're soaring in the sky, your body knows you're not, so you won't panic.”

James Servos, Perception Manager, OTTO Motors
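The integration described above (acceleration to velocity to position, and rotation rate to heading) is usually called dead reckoning. The sketch below is a simplified, hypothetical illustration of it for a vehicle moving on a flat floor: it assumes the IMU reports forward acceleration in m/s² and yaw rate in rad/s at a fixed sample interval `dt`, and it uses plain Euler integration. The function name `dead_reckon` is made up for this example and is not part of any OTTO software.

```python
import math


def dead_reckon(accels, yaw_rates, dt):
    """Integrate forward acceleration (m/s^2) and yaw rate (rad/s) into a planar path.

    Returns a list of (x, y, heading, speed) tuples, one per sample.
    Semi-implicit Euler: speed and heading are updated first, then used to
    advance the position.
    """
    x = y = heading = speed = 0.0
    path = []
    for accel, yaw_rate in zip(accels, yaw_rates):
        heading += yaw_rate * dt
        speed += accel * dt
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        path.append((x, y, heading, speed))
    return path


# Hypothetical case: the vehicle is actually standing still, but its
# accelerometer has a small constant bias of 0.01 m/s^2. Sampled at 100 Hz for 10 s.
n = 1000
path = dead_reckon([0.01] * n, [0.0] * n, dt=0.01)
x, y, heading, speed = path[-1]
print(f"apparent drift after 10 s: {x:.2f} m")
```

Even this tiny bias makes a stationary vehicle appear to drift about half a metre in ten seconds, and the error keeps growing with time. That is why, as the quote suggests, IMU data is fused with what the vehicle can "see" and "feel" rather than used on its own.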

LiDAR

The primary "vision" unit on the autonomous vehicle is a LiDAR system, short for Light Detection and Ranging. To enable the split-second decision-making that self-driving vehicles need, the LiDAR system provides accurate 3D information about the surrounding environment. Using this data, the processor implements object identification, collision prediction, and avoidance strategies. LiDAR is well suited to "big picture" imaging: a rotating, scanning mirror assembly sweeps the laser around the vehicle to provide the needed surrounding view.

“Using LiDAR to map the facility provides a source of absolute position estimation in an indoor facility. It provides our position to the fixed environment, such as walls and allows OTTO to maintain its precise position anywhere in the facility.”

James Servos, Perception Manager, OTTO Motors
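As a rough illustration of how raw LiDAR readings become the points a vehicle reasons about, the sketch below makes two assumptions: the unit is a pulsed time-of-flight LiDAR (the article doesn't say how OTTO's units measure range), and a scan is a single planar sweep stored as one range per beam angle, loosely following the layout of the ROS `sensor_msgs/LaserScan` message (`angle_min`, `angle_increment`, a maximum range). The function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0


def range_from_time_of_flight(round_trip_s: float) -> float:
    """The pulse travels out and back, so the distance is half the round-trip path."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def scan_to_points(ranges, angle_min, angle_increment, max_range):
    """Convert a planar scan into (x, y) points in the sensor's own frame.

    Beam i points at angle_min + i * angle_increment (radians). Readings that
    are non-finite, non-positive or beyond max_range are treated as "no return"
    and dropped.
    """
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            continue
        angle = angle_min + i * angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points


# Hypothetical readings: a 20-nanosecond round trip is roughly 3 m away.
print(f"{range_from_time_of_flight(20e-9):.3f} m")
print(scan_to_points([1.0, float("inf"), 2.0], angle_min=0.0,
                     angle_increment=math.pi / 2, max_range=10.0))
```

Points like these, expressed relative to the vehicle, are what can be compared against a stored map of fixed features such as walls to produce the absolute position estimate described in the quote.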

Wheel Encoder

A wheel encoder is a sensor attached to a rotating object (in OTTO's case, a wheel) to measure its rotation. By tracking how far each wheel has turned over time, OTTO can determine the distance traveled as well as its velocity and acceleration.

“An encoder is essentially a circle that rotates with the wheel, except that it has slits or holes cut into it. When the laser shines, the sensor knows if the laser is hitting it. As the wheel turns, it will cut off that laser and the sensor will see nothing. By doing that, wheel rotation can be measured precisely. Fed back into the software and OTTO can see exactly where it is.”

James Servos, Perception Manager, OTTO Motors
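The slotted-disk principle in the quote can be sketched in a few lines: count each time the beam is restored through a new slot, convert ticks to distance rolled, and, for a vehicle steered by the speed difference between its left and right wheels (a differential drive, assumed here), turn the two wheel distances into a change in position and heading. The slot count, wheel radius, track width and all function names below are hypothetical values chosen for illustration, not OTTO specifications.

```python
import math
from dataclasses import dataclass
from typing import Sequence

# Hypothetical geometry for illustration only.
SLOTS_PER_REV = 360    # slots cut into the encoder disk
WHEEL_RADIUS_M = 0.1   # wheel radius in metres
TRACK_WIDTH_M = 0.5    # distance between the left and right drive wheels


def count_ticks(light_seen: Sequence[bool]) -> int:
    """Count dark-to-light transitions in sampled optical readings.

    True means the beam reached the sensor through a slot, so each new slot
    passing the beam produces exactly one dark-to-light edge.
    """
    return sum(1 for prev, cur in zip(light_seen, light_seen[1:]) if cur and not prev)


def ticks_to_distance(ticks: int) -> float:
    """Distance rolled by the wheel rim for a given (signed) tick count."""
    return ticks / SLOTS_PER_REV * 2.0 * math.pi * WHEEL_RADIUS_M


@dataclass
class Pose:
    x: float = 0.0      # metres
    y: float = 0.0      # metres
    theta: float = 0.0  # heading, radians


def update_pose(pose: Pose, left_ticks: int, right_ticks: int) -> Pose:
    """Differential-drive odometry: advance the pose by one interval of wheel ticks."""
    d_left = ticks_to_distance(left_ticks)
    d_right = ticks_to_distance(right_ticks)
    d_center = (d_left + d_right) / 2.0
    d_theta = (d_right - d_left) / TRACK_WIDTH_M
    mid_theta = pose.theta + d_theta / 2.0  # midpoint heading approximates a curved path
    return Pose(
        x=pose.x + d_center * math.cos(mid_theta),
        y=pose.y + d_center * math.sin(mid_theta),
        theta=pose.theta + d_theta,
    )


# Equal ticks on both wheels: straight ahead by one wheel circumference (about 0.63 m).
print(update_pose(Pose(), left_ticks=360, right_ticks=360))
```

One caveat on the design: signed tick counts assume the encoder can also sense the direction of rotation, for example with a second, offset sensor (a quadrature encoder). A single beam and sensor, as in the quote, counts slots but cannot tell forwards from backwards on its own.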

OTTO Always Finds a Way

The sensors employed by every OTTO self-driving vehicle are designed to deliver accuracy and reliability on every mission OTTO is assigned. Navigating through the dense and dynamic environments of a manufacturing facility, warehouse or distribution center takes precision and reliability, and OTTO provides both with industry-leading sensor fusion capabilities. Automation is solving two challenges that today’s manufacturers are dealing with: a significant labor crisis and the urgency to compete on a global scale at an ever-increasing pace.

Published with permission from the OTTO MOTORS blog.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow.
