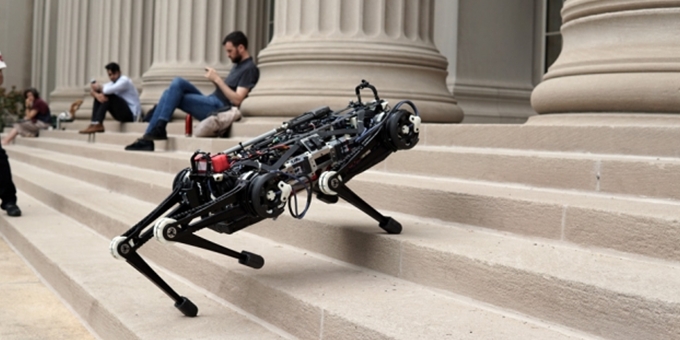

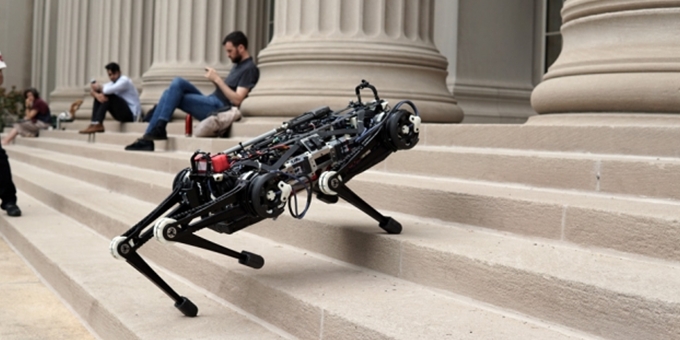

MIT Created a Blind Robot That Can Run and Jump

Kayla Matthews | Productivity Bytes

Developers at MIT are known for projects that push the boundaries of what modern technology can accomplish, and their latest invention is no exception. Their newest creation, a blind robot that can climb stairs and navigate inaccessible or dangerous areas, is already being touted for its usefulness in factory settings and during emergency response.

Navigating Without Vision

Named Cheetah 3 by its developers, the robot weighs less than 100 pounds and is roughly the size and shape of a medium-sized dog. It is well suited to crawling through narrow spaces, traversing dangerous obstacles and inspecting remote or inaccessible areas.

But this isn't the first time robots have filled this role. Unmanned and remote-controlled vehicles are already available, and some aerial drones offer much of the same functionality from above.

What makes Cheetah 3 unique is its ability to function without vision. In robotic terms, this means it has no integrated cameras, which robots typically rely on for navigation and inspection. Instead, Cheetah 3 relies on multiple sensors that collect data with every step. That information is processed in real time and used by built-in artificial intelligence (AI) to reach assigned areas, avoid unexpected obstacles, climb stairs and more.

The developers are confident that their blind robot is every bit as effective as camera-equipped robots. Incoming video signals are subject to noise and interference that can produce an inaccurate or incomplete picture, and some systems may even struggle to maintain a consistent connection with the host.

Integrated cameras are also limited in what they can see. Many have a narrow field of view that can miss small or nearby objects, so current-generation robots are sometimes blocked or damaged by obstacles they never detected.

Powered by Highly Advanced AI

Instead of relying on video, Cheetah 3 combines its sensor data with two distinct algorithms: a contact detection algorithm and a model-predictive control algorithm.

The first AI-driven process, the contact detection algorithm, kicks in whenever a sensor registers contact with something, whether exposed ground, a solid surface, a stairway or an obstacle. The robot is designed to respond differently to different surfaces and materials for maximum efficiency and safety.

It also governs how the robot moves its four legs, deciding when each leg should switch from swinging through the air to stepping on the ground. It does so by continuously estimating, for each leg, the probability that it is touching the ground, the force it is generating and its expected position while in mid-swing.
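To make the idea of per-leg contact probabilities concrete, here is a minimal Python sketch. It is not MIT's implementation: the logistic curves, every threshold and width constant, and the naive-Bayes style fusion (adding the log-odds of cues treated as independent) are assumptions chosen for illustration. It turns the three cues mentioned above (gait timing, estimated leg force and foot position) into probabilities and fuses them into a single estimate of whether a leg is on the ground.

```python
import math


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def logit(p: float, eps: float = 1e-12) -> float:
    """Log-odds of p, clamped away from 0 and 1."""
    p = min(max(p, eps), 1.0 - eps)
    return math.log(p / (1.0 - p))


# Hypothetical tuning constants, not Cheetah 3's real parameters.
STANCE_START_PHASE = 0.5  # gait phase (0..1) at which the foot is scheduled to land
PHASE_WIDTH = 0.05
FORCE_THRESHOLD_N = 50.0  # estimated leg force that suggests ground contact
FORCE_WIDTH_N = 10.0
CLEARANCE_M = 0.01        # foot height above expected ground that still counts as touching
HEIGHT_WIDTH_M = 0.005


def contact_probability(phase: float, force_n: float, foot_height_m: float) -> float:
    """Fuse timing, force and position cues into one probability of ground contact."""
    p_timing = sigmoid((phase - STANCE_START_PHASE) / PHASE_WIDTH)
    p_force = sigmoid((force_n - FORCE_THRESHOLD_N) / FORCE_WIDTH_N)
    p_height = sigmoid((CLEARANCE_M - foot_height_m) / HEIGHT_WIDTH_M)
    # Treat the cues as independent evidence: sum their log-odds, then map back.
    return sigmoid(logit(p_timing) + logit(p_force) + logit(p_height))


# Foot hits an obstacle early in its swing: force and height outweigh the schedule.
print(contact_probability(phase=0.2, force_n=150.0, foot_height_m=0.0) > 0.5)  # True
# Foot is scheduled to land but feels no force and is still above the ground.
print(contact_probability(phase=0.7, force_n=0.0, foot_height_m=0.05) > 0.5)   # False
```

Fusing the cues this way lets strong sensor evidence override the gait schedule: a foot that strikes a stair edge mid-swing is still flagged as in contact, while a foot that was due to land over a missing step is not.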

The model-predictive control algorithm handles the rest of the process. It predicts where the robot will be a half-second into the future, given the force each of its four legs might apply, and makes the adjustments needed to maintain balance. Cheetah 3 runs these model-predictive control calculations every 50 milliseconds, or 20 times per second. In the lab, the robot withstood the engineers' kicks, pushes and pulls.
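The receding-horizon idea behind model-predictive control can be illustrated with a toy example. The Python sketch below reduces the robot to a point mass moving only up and down, with one total vertical leg force as the control input. The 0.5-second horizon and 50-millisecond update period come from the article; the 40 kg mass, 0.45 m target height, 50 ms prediction step, 0 to 1000 N force range and brute-force search over candidate forces are hypothetical simplifications. As noted above, the real controller reasons about the force on each of the four legs; this sketch collapses them into one.

```python
G = 9.81                 # gravity, m/s^2
MASS_KG = 40.0           # hypothetical body mass (article: "less than 100 pounds")
TARGET_HEIGHT_M = 0.45   # hypothetical standing height
HORIZON_S = 0.5          # look-ahead from the article
CONTROL_PERIOD_S = 0.05  # re-plan every 50 ms (20 Hz), from the article
STEPS = round(HORIZON_S / CONTROL_PERIOD_S)  # 10 prediction steps of 50 ms each
CANDIDATE_FORCES_N = range(0, 1001)  # legs can only push, so force >= 0 (hypothetical cap)


def predict(z: float, v: float, force_n: float) -> list[float]:
    """Roll a point-mass model forward over the horizon under a constant leg force."""
    heights = []
    for _ in range(STEPS):
        v += (force_n / MASS_KG - G) * CONTROL_PERIOD_S  # semi-implicit Euler
        z += v * CONTROL_PERIOD_S
        heights.append(z)
    return heights


def plan_force(z: float, v: float, target: float = TARGET_HEIGHT_M) -> int:
    """Pick the candidate force whose predicted trajectory stays closest to the target."""
    def cost(force_n: float) -> float:
        return sum((h - target) ** 2 for h in predict(z, v, force_n))
    return min(CANDIDATE_FORCES_N, key=cost)


def simulate_kick(kick_velocity: float, duration_s: float = 2.0) -> float:
    """Start at the target height, apply a sudden vertical velocity, let MPC respond."""
    z, v = TARGET_HEIGHT_M, kick_velocity
    for _ in range(round(duration_s / CONTROL_PERIOD_S)):
        force_n = plan_force(z, v)  # plan over the full 0.5 s horizon...
        v += (force_n / MASS_KG - G) * CONTROL_PERIOD_S  # ...but apply only 50 ms of it
        z += v * CONTROL_PERIOD_S
    return z


print(simulate_kick(-0.5))  # body height after 2 s of recovery from a downward shove
```

Each call to `plan_force` looks a full half-second ahead, but only the first 50 ms of that plan is applied before the controller re-plans from the new state. Re-planning 20 times per second is what lets the controller keep correcting after a disturbance such as a kick.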

Although it passed laboratory tests with flying colors, Cheetah 3 has yet to hit the market. The developers will officially unveil Cheetah 3 in October at the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS) in Madrid, Spain, but it is unclear when the robot will be available.

A Collaborative Effort

While MIT developers led the project, they did receive some help. Organizations including the Toyota Research Institute, the Air Force Office of Scientific Research, Foxconn and Naver supported the initial research, design and development of Cheetah 3.

* Image via MIT

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow