
Robot plays "Rock, Paper, Scissors" - Part 2/3

Case Study from IDS Imaging

A vision app as a simulated computer opponent

How do you get a robot to play "Rock, Paper, Scissors"? Sebastian Trella, robotics fan and blogger, has now come a decisive step closer to solving this puzzle. On the camera side, he used IDS NXT, a complete system for working with intelligent cameras. The system covers the entire workflow, from capturing and labelling training images and training the networks to creating evaluation apps and running the finished applications. In part 1 of this follow-up story, he implemented gesture recognition using AI-based image processing and trained the required neural networks. The recognized gestures could then be processed further by a purpose-built vision app.


Further processing of the analyzed image data

The app forms the second phase of the project and, broadly speaking, is intended to let players compete against a simulated computer opponent. It builds on the trained AI and processes its results further. It also provides the AI opponent, which randomly "outputs" one of the three predefined hand gestures and compares it with the player's gesture. The app then decides who has won or whether the round is a draw. The vision app is therefore the interface to the player on the computer monitor, while the camera is the interface for capturing the player's gestures.
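The round logic described above (random opponent move, comparison, win/lose/draw decision) is simple enough to sketch in a few lines. Trella built his app in the block-based editor, so the following is only a hypothetical Python illustration of the same idea, not his implementation. It assumes the gesture classifier reports its result as one of the labels "rock", "paper" or "scissors"; these label names and all function names are made up for the example.

```python
import random
from typing import Optional, Tuple

# Hypothetical labels; the real classifier's class names may differ.
GESTURES = ("rock", "paper", "scissors")

# Each gesture beats exactly one other gesture.
BEATS = {"rock": "scissors", "paper": "rock", "scissors": "paper"}


def opponent_move(rng: Optional[random.Random] = None) -> str:
    """Simulated opponent: pick one of the three gestures uniformly at random."""
    rng = rng or random.Random()
    return rng.choice(GESTURES)


def decide(player: str, computer: str) -> str:
    """Return 'player', 'computer' or 'draw' for a single round."""
    if player not in BEATS or computer not in BEATS:
        raise ValueError(f"unknown gesture: {player!r} / {computer!r}")
    if player == computer:
        return "draw"
    return "player" if BEATS[player] == computer else "computer"


def play_round(player: str, rng: Optional[random.Random] = None) -> Tuple[str, str]:
    """Draw the opponent's gesture and decide the round against the player's gesture."""
    computer = opponent_move(rng)
    return computer, decide(player, computer)


if __name__ == "__main__":
    # "rock" stands in for the label the camera's gesture recognition would deliver.
    computer, result = play_round("rock")
    print(f"Computer shows {computer}: winner = {result}")
```

Keeping the "who beats whom" rules in a single lookup table means the decision needs only one comparison, and passing an explicit `random.Random` makes rounds reproducible for testing.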

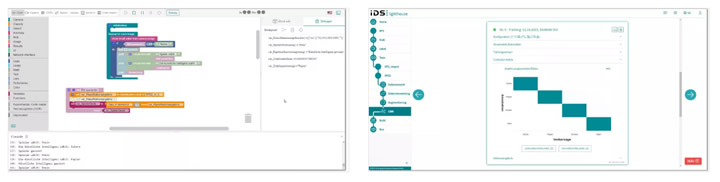

The app was created in the cloud-based AI vision studio IDS lighthouse, which was also used to train the neural networks. The block-based code editor, which resembles the free graphical programming environment Scratch, made it easy for Sebastian Trella: "I was already familiar with vision app programming with Scratch/Blockly from LEGO® MINDSTORMS® and various other robotics products, and I found my way around immediately. The programming interface is practically identical, so I was already familiar with the required way of thinking. Whether I'm developing an AI-supported vision app on an IDS NXT camera or a motion sequence for a robot, the programming works in exactly the same way."

"Fine-tuning" directly on the camera

However, displaying text on images was new to Trella: "The robots I have programmed so far have only ever delivered output via a console. Integrating the output of the vision app directly into the camera image was a new approach for me." He was particularly surprised that the vision app can be edited both in the cloud and on the camera itself, and also by how convenient it was to develop on the device and how well the camera hardware performed: "Small changes to the program code can be tested directly on the camera without having to recompile everything in the cloud. The programming environment runs very smoothly and stably." However, he still sees room for improvement in debugging on the embedded device, especially in synchronizing the embedded device and the cloud system after changes have been made on the camera.

Trella found a real plus point, which he described as "great", in the camera's web interface. There he found the Swagger UI, an open-source tool for documenting and testing the integrated REST interface, complete with examples. This made his work easier. In this context, he also offers some suggestions for the future development of the IDS NXT system: "It would be great to have switchable modules for communicating with third-party robot systems so that the robot arm can be 'co-programmed' directly from the vision app programming environment. This would save cabling between the robot and camera and simplify development. Apart from that, I missed the ability to import image files directly via the programming environment; so far, this has only been possible via FTP. In my app, for example, I would then have displayed a picture of a trophy for the winner."

What's next?

"Building the vision app was great fun, and I would like to thank you very much for the great opportunity to 'play' with such interesting hardware," says Sebastian Trella. The next step is to examine and try out the options for communication between the vision app and the robot arm. The virtual computer opponent should not only display its gesture on the screen, i.e. in the camera image, but also perform it in real life with the robot arm. This is also the final step towards the finished "Rock, Paper, Scissors" game: the robot is brought to life.

To be continued...

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow

IDS Imaging Development Systems Inc.

World-class image processing and industrial cameras "Made in Germany". Machine vision systems from IDS are powerful and easy to use. IDS is a leading provider of area scan cameras with USB and GigE interfaces, 3D industrial cameras and industrial cameras with artificial intelligence. Industrial monitoring cameras with streaming and event recording complete the portfolio. One of IDS's key strengths is customized solutions. An experienced project team of hardware and software developers makes almost anything technically possible to meet individual specifications - from custom design and PCB electronics to specific connector configurations. Whether in an industrial or non-industrial setting: IDS cameras and sensors assist companies worldwide in optimizing processes, ensuring quality, driving research, conserving raw materials, and serving people. They provide reliability, efficiency and flexibility for your application.
