The blog explains how these cameras enable precise maneuvering, reduce manual intervention, and enhance safety in various embedded vision applications.

Prabu Kumar | e-con Systems

Autonomous vehicles, such as warehouse robots, rely on precise maneuvering. Surround-view cameras powered by NVIDIA Jetson AGX Orin™ provide a synchronized multi-camera view, allowing these robots to move freely within designated areas without intensive manual intervention.

The performance of autonomous vehicles like warehouse robots depends on their ability to maneuver accurately within a specified area, whatever its size. In practice, robots do get stuck, and when one is stranded in a corner of the warehouse, recovering it is cumbersome. First, the nearest human operator has to be contacted. Then, they have to physically locate the robot. Finally, they have to manually place it back in the right position.

With NVIDIA Jetson AGX Orin™, surround-view camera solutions can be developed and customized to handle situations like this using image stitching workflows. When the robot gets stuck, the human operator can log in to the web console and steer the robot back on course without leaving their location. As a result, autonomous vehicles such as warehouse robots, robotaxis, self-driving trucks, and smart tractors can move within specified areas without intensive manual effort.

Surround-view cameras powered by NVIDIA Jetson AGX Orin™

A surround-view multi-camera solution built on NVIDIA Jetson AGX Orin™ can stream video without motion blur. With synchronized capture across its cameras, it is changing how autonomous robots see, think, and maneuver.

For instance, e-con Systems has built ArniCAM20, a surround-view camera solution based on NVIDIA Jetson AGX Orin™. It follows a proprietary approach that helps capture and generate a stitched image from multiple cameras through a series of post-processing operations. A critical aspect of the stitching process is image correction, of which there are two types: geometric and photometric.

(Fig: Bird’s eye view stitched image)

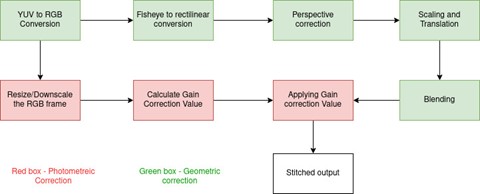

(Fig: Flow chart of bird’s eye view generation)

How does geometric correction work?

- Fisheye to rectilinear projection conversion: This initial step converts the full-frame fisheye view into a rectilinear projection. It normalizes the distortion inherent in fisheye lenses and prepares the image for the later processing stages.

- Perspective correction: The basic lens distortion is removed in the fisheye to rectilinear conversion stage. Perspective correction changes the viewing angle of the camera, that is, its extrinsic orientation (roll, pitch, yaw). It is done by mapping the image onto a sphere and rotating it in a spherical coordinate system. The viewing angle is changed in software so that the image appears to have been captured from a top-down view.

- Image scaling & translation along the X and Y axes: In this stage, we scale each undistorted, rectified camera image in both the x and y directions (zoom in and out) to bring them all into a common coordinate system. After scaling, we translate the images to achieve a seamless transition between two images in the overlap region.

- Seamless image blending: The final stage in geometric correction is the seamless blending of the four images in the overlap region. Blending is the process where, in the overlap region, the pixel color values are taken from both the images in a certain proportion and added together.
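As a rough illustration of the projection, viewing-angle, and blending stages above, the Python sketch below maps one output pixel back to fisheye coordinates and blends two overlapping pixels. It is not e-con Systems' implementation. It assumes an ideal equidistant fisheye model (r = f·θ), a single pitch rotation for the viewing-angle change, and a linear blend weight; a real lens needs calibrated distortion coefficients. The function and parameter names (`rect_to_fisheye`, `blend_overlap`, `f_rect`, `f_fish`, `pitch_rad`) are hypothetical.

```python
import math


def rect_to_fisheye(u, v, out_size, f_rect, fish_center, f_fish, pitch_rad=0.0):
    """Map an output (rectilinear) pixel (u, v) to source fisheye coordinates.

    Assumption: ideal equidistant fisheye, where the distance from the image
    centre is r = f_fish * theta (theta = angle from the optical axis).
    """
    width, height = out_size
    # Ray through the output pixel in the virtual pinhole camera frame.
    x = u - width / 2.0
    y = v - height / 2.0
    z = f_rect
    # Rotate the ray about the X axis to change the virtual viewing angle.
    c, s = math.cos(pitch_rad), math.sin(pitch_rad)
    y, z = c * y - s * z, s * y + c * z
    # Angle from the fisheye optical axis and direction around it.
    theta = math.atan2(math.hypot(x, y), z)
    phi = math.atan2(y, x)
    r = f_fish * theta
    cx, cy = fish_center
    return cx + r * math.cos(phi), cy + r * math.sin(phi)


def blend_overlap(pixel_a, pixel_b, t):
    """Linearly blend two RGB pixels: t=0 gives pixel_a, t=1 gives pixel_b."""
    t = min(max(t, 0.0), 1.0)
    return tuple(round((1.0 - t) * a + t * b) for a, b in zip(pixel_a, pixel_b))


if __name__ == "__main__":
    # The centre of the output image looks straight down the optical axis,
    # so with no rotation it maps to the fisheye image centre.
    print(rect_to_fisheye(320, 240, (640, 480), 300.0, (960.0, 540.0), 200.0))
    # Halfway across the overlap region, both cameras contribute equally.
    print(blend_overlap((100, 0, 200), (200, 100, 0), 0.5))
```

In a full pipeline, a mapping like this would be evaluated for every output pixel (typically in a shader), and the blend weight `t` would vary smoothly across the overlap region so that neither camera's edge is visible.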

How does photometric correction work?

- The bird’s eye view uses the input from multiple cameras. Each camera sees different scene illumination and runs its own auto exposure (AE) and auto white balance (AWB). Therefore, the composite image suffers from serious brightness and color inconsistency across different views, resulting in noticeable stitching boundaries. Photometric correction evens out the color and intensity values across all the cameras.

- Our approach is to use a linear model to correct for the photometric misalignment. In step 1, we select data from each input frame and compute statistics to be used for analyzing photometric misalignment. In step 2, we calculate a gain correction value for each color channel and each view from these samples. Finally, in step 3, the calculated gain correction value is applied to each color channel and each view. To apply the correction, one camera is taken as the master and the rest are taken as slaves, and the master correction data is applied to the slaves.

- To reduce the impact of small details captured by the camera and to reduce the computational complexity, we downsample each overlapping region by block averaging. The downsampled images are then used to calculate the photometric misalignment.
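The three steps above can be sketched for a single color channel in Python. This is a simplified illustration, not the production algorithm: it assumes the gain is the ratio of mean intensities in the block-averaged overlap region, which is one simple form of a linear model. The helper names (`block_average`, `channel_gain`, `apply_gain`) and the `block` and `eps` parameters are hypothetical.

```python
def block_average(channel, block):
    """Downsample a 2D list by averaging non-overlapping block x block tiles."""
    rows, cols = len(channel), len(channel[0])
    out = []
    for r in range(0, rows - block + 1, block):
        row = []
        for c in range(0, cols - block + 1, block):
            tile = [channel[r + i][c + j]
                    for i in range(block) for j in range(block)]
            row.append(sum(tile) / len(tile))
        out.append(row)
    return out


def mean_2d(channel):
    """Mean of all values in a non-empty 2D list."""
    return sum(map(sum, channel)) / (len(channel) * len(channel[0]))


def channel_gain(master_overlap, slave_overlap, block=2, eps=1e-6):
    """Steps 1 and 2: statistics from the overlap, then a per-channel gain.

    Assumption: gain = mean(master) / mean(slave) over the downsampled overlap.
    """
    master_mean = mean_2d(block_average(master_overlap, block))
    slave_mean = mean_2d(block_average(slave_overlap, block))
    return master_mean / max(slave_mean, eps)


def apply_gain(channel, gain, max_value=255):
    """Step 3: scale every pixel of the slave channel, clamping to max_value."""
    return [[min(max_value, round(p * gain)) for p in row] for row in channel]


if __name__ == "__main__":
    master = [[100, 100], [100, 100]]   # overlap as seen by the master camera
    slave = [[50, 50], [50, 50]]        # same overlap, darker in the slave
    gain = channel_gain(master, slave)
    print(gain)
    print(apply_gain([[10, 200]], gain))
```

The same calculation would be repeated for each color channel and each slave view, giving one gain per channel per camera.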

Importance of Jetson in Implementation

The implementation should use custom OpenGL shaders for the photometric and geometric corrections. NVIDIA’s optimized Pixel Buffer Objects (PBO) can be used to move frames efficiently from the kernel drivers to OpenGL textures, enabling asynchronous, high-speed frame transfer. This results in a higher frame rate (fps).

The code block shown below is the part where we map the pixel buffer object to the v4l2 user pointers.

(Code listing: mapping the pixel buffer object to the V4L2 user pointers)

In the above code, the “ptr” variable is passed to V4L2 as the user pointer. Loading the frame from the Pixel Buffer Object into the OpenGL texture then happens asynchronously in the background. This saves a buffer copy and enables a higher frame rate. Without the pixel buffer object, the camera frame would first be delivered to a user-space buffer through the V4L2 user pointer, and that buffer would then be loaded into the texture sequentially.

The next important element that simplifies the bird’s eye view pipeline is nveglstreamsrc. The camera frames are passed to OpenGL shaders, which output the stitched bird’s eye view frame. The output framebuffer is copied to the EGL stream producer, which in turn passes the frame to nveglstreamsrc (a GStreamer plugin). Since the stitched frames arrive through a GStreamer source plugin, integrating DeepStream on the bird’s eye view frame becomes easier. This also makes it simple to send the frame over the network to a remote device.

The above function call swaps the OpenGL framebuffer output to EGL.

Advantages of NVIDIA Jetson AGX Orin™-based surround-view cameras

- Surround-view cameras significantly improve operational efficiency in contactless transport and delivery. This technology streamlines logistics, making processes faster and more reliable.

- These cameras enable employees to focus on high-value tasks, reducing the need for manual intervention. This shift increases productivity and job satisfaction by eliminating repetitive tasks.

- The integration of these cameras accelerates the acceptance of autonomous vehicles and robots. Their user-friendly vision solutions make adoption easier and more appealing.

- Businesses can automate essential operations with confidence, knowing these cameras minimize disruptions. This automation ensures smoother, more continuous business processes.

- Incorporating autonomous robots into daily operations leads to notable cost savings. This technology optimizes resource use, making operations more economically efficient.

- Surround-view cameras open up new remote work opportunities for individuals with mobility issues. They enable a more inclusive workforce, allowing participation from various locations.

- The technology creates opportunities for workers to transition to skilled roles. This shift reduces the burden of tedious tasks, enhancing job fulfillment.

- Logistics operations become more efficient and less time-consuming with these cameras. They simplify the management and movement of goods, saving time and energy.

- Implementing these cameras in workplaces significantly improves safety and security. The use of remotely controlled robots reduces risks and enhances monitoring capabilities.

Please check out our 360° Surround view camera.

Prabu Kumar is the Chief Technology Officer and Head of Camera Products at e-con Systems, and comes with a rich experience of more than 15 years in the embedded vision space. He brings to the table a deep knowledge in USB cameras, embedded vision cameras, vision algorithms and FPGAs. He has built 50+ camera solutions spanning various domains such as medical, industrial, agriculture, retail, biometrics, and more. He also comes with expertise in device driver development and BSP development. Currently, Prabu’s focus is to build smart camera solutions that power new age AI based applications.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow

e-con Systems

Since 2003, e-con Systems® has been designing, developing & manufacturing custom and off-the-shelf OEM camera solutions. Backed by a large in-house team of experts, our cameras are currently embedded in 350+ customer products. So far, we have shipped over 2 million cameras to the USA, Europe, Japan, South Korea, & more. Our portfolio includes MIPI, GMSL, GigE, USB cameras, ToF & Stereo cameras & smart AI cameras. They come with industry-leading features, including low-light performance, HDR, global shutter, LED flicker mitigation, multi-camera synchronization, & IP69K-rated enclosure. e-con Systems® also offers TintE™ - an FPGA-based ISP with all necessary imaging pipeline components. It provides a full ISP pipeline as a turnkey solution, featuring optimized, customizable blocks like debayering, AWB, AE, gamma correction and more. Our powerful partner ecosystem, comprising sensor partners, ISP partners, carrier board partners, & more, enables us to offer end-to-end vision solutions.
