
The Real Barrier to Trusting Robots? It Comes Down to Control

Andrew Singletary, Co-founder and CEO | 3Laws Robotics

In the 1940s, science fiction writer Isaac Asimov imagined a world where intelligent machines followed three simple rules: don’t harm humans, obey orders, and protect themselves. Eighty years later, AI-powered systems are moving from fiction into the real world, making those imagined rules feel more relevant than ever.

But in reality, where uncertainty is inevitable, the question becomes: how should a robot behave in environments that are messy, unpredictable, and unscripted?

To answer that, we need to turn to math.

Perception: Necessary But Not Sufficient

If we want people to fully trust robots in shared spaces—on sidewalks, at job sites, or even in hospital corridors—these systems must be consistently reliable and unquestionably safe. Yet when we talk about robotics safety, the conversation often centers on sensors and perception (how robots interpret their surroundings and decide how to act). After all, if a robot can’t detect an obstacle, how can it avoid it?

But perception is inherently uncertain. Sensors can be obstructed and unexpected situations are inevitable. No robot will ever have a perfect view of everything. And trying to eliminate every source of uncertainty quickly becomes an endless game of whack-a-mole: chasing down every edge case and blind spot.

For users, a lack of safety is a dealbreaker—and rightfully so. If people can’t trust a system to make the right decision when it matters most, they simply won’t use it. That’s why, as robots move into more complex, real-world environments, the goal shouldn’t be to see everything. It should be to act safely, even when there are some unknowns.

A fundamental challenge in perception is distinguishing between static obstacles (e.g., walls) and dynamic obstacles (e.g., people and other robots). Because this is hard to do reliably, the industry standard is to assume that every point in a point cloud, or every cell in an occupancy grid, is moving toward the robot at maximum speed. This conservative assumption severely limits how fast or how close a robot can move near walls or objects.

One new approach is to add a fundamentally safe perception layer that relaxes that assumption. Rather than always assuming the worst case, real-time analytics determine the range of possible velocities for each point or object in a scene. The robot then only needs to stay safe with respect to that range, not the worst-case assumption, which allows it to move significantly faster and closer to obstacles than current approaches permit.

Here’s a video of how this layer works.

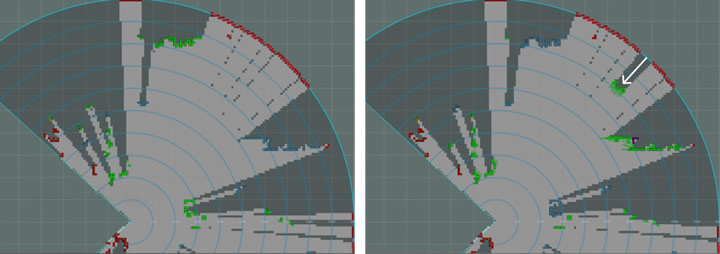

Each occupancy grid cell carries a range of hypotheses for its possible velocities. As objects start to move in the scene (as shown in the top right), their velocities are estimated (shown by the small green arrows) and the confidence in those velocities increases (shown by the shift from red to green). Objects that were previously identified and are no longer moving are shown in blue.
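To make the velocity-range idea concrete, here is a minimal sketch in Python. It is not the layer described above, just a simplified one-dimensional illustration under stated assumptions: the robot brakes at a constant deceleration, the obstacle keeps closing at a constant speed until the robot has stopped, and "safe" means the robot can come to rest before the gap closes. The function name, the numbers, and the velocity range are all hypothetical.

```python
import math


def max_safe_speed(gap_m, approach_speed_mps, max_decel_mps2):
    """Largest robot speed (m/s) from which it can still stop before the gap closes.

    Assumed model: while the robot brakes from speed v at constant deceleration a,
    it travels v^2 / (2a) and the obstacle closes u * (v / a). Requiring
    v^2 / (2a) + u * v / a <= gap gives v <= -u + sqrt(u^2 + 2 * a * gap).
    """
    u = max(0.0, approach_speed_mps)  # an obstacle moving away is treated as static
    return -u + math.sqrt(u * u + 2.0 * max_decel_mps2 * gap_m)


# Hypothetical scenario: an obstacle 2 m ahead, robot can brake at 1 m/s^2.
GAP_M = 2.0
MAX_DECEL = 1.0
ASSUMED_MAX_OBSTACLE_SPEED = 2.0  # worst case: everything rushes at the robot

# Worst-case assumption: the cell approaches at the maximum possible speed.
worst_case = max_safe_speed(GAP_M, ASSUMED_MAX_OBSTACLE_SPEED, MAX_DECEL)

# Relaxed assumption: an estimator has bounded the cell's approach speed
# to a range (hypothetical values); only the upper bound matters for safety.
velocity_range = (-0.1, 0.5)
relaxed = max_safe_speed(GAP_M, max(velocity_range), MAX_DECEL)

print(f"worst-case speed limit: {worst_case:.2f} m/s")
print(f"velocity-range speed limit: {relaxed:.2f} m/s")
```

In this toy model, tightening the bound on how fast the obstacle could be approaching directly raises the speed the robot is allowed to travel, without giving up the guarantee that it can stop in time.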

In the end, trust can’t come from perception alone. It also requires smarter, more resilient control over what a robot can and can’t do. And this control needs to account for all of these perception possibilities.

The Math Behind Safe, Real-World Decisions

Ensuring true safety means defining a set of behaviors a system must adhere to, no matter the situation. But here’s the catch: many so-called “safe” boundaries aren’t truly safe. Take a truck following another vehicle at a 10-foot distance. That might seem safe until you factor in how fast the truck is moving and how quickly it can brake. A truly safe following distance is not a fixed number; it depends on velocity and braking performance.

That’s why we can’t define safety based solely on the environment. We also have to account for what the system is actually capable of doing. After all, how can we expect a robot to behave safely if we haven’t first defined the limits of its abilities?
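The truck example can be sketched with basic kinematics. The snippet below is a simplified illustration, not a production safety rule: it assumes straight-line motion, constant braking decelerations, a fixed reaction delay for the follower, and a lead vehicle that brakes to a full stop. The function name and all numeric values are hypothetical.

```python
def min_following_gap(v_follow, v_lead, brake_follow, brake_lead, reaction_time):
    """Smallest gap (m) that avoids a collision if the lead vehicle brakes to a stop.

    Speeds are in m/s, decelerations in m/s^2, reaction_time in seconds.
    """
    # Distance the follower covers while reacting, then while braking to rest.
    stop_follow = v_follow * reaction_time + v_follow**2 / (2.0 * brake_follow)
    # Distance the lead vehicle covers while braking to rest.
    stop_lead = v_lead**2 / (2.0 * brake_lead)
    return max(0.0, stop_follow - stop_lead)


FT_TO_M = 0.3048
fixed_gap_m = 10 * FT_TO_M  # the "10-foot" rule from the example

# Hypothetical truck: brakes at 4 m/s^2, reacts in 0.5 s;
# the vehicle ahead can brake harder, at 8 m/s^2.
for speed in (2.0, 10.0, 25.0):
    needed = min_following_gap(speed, speed, brake_follow=4.0,
                               brake_lead=8.0, reaction_time=0.5)
    verdict = "enough" if fixed_gap_m >= needed else "NOT enough"
    print(f"{speed:5.1f} m/s: need {needed:6.2f} m, 10 ft is {verdict}")
```

Under these assumptions, the same 10-foot gap is adequate at walking pace and badly inadequate at road speeds, which is why the boundary has to be defined in terms of the system's state and capabilities rather than distance alone.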

Building this kind of safety framework isn’t easy. It requires managing uncertainty, such as noisy sensor inputs, communication delays, and unpredictable conditions like slick roads or poor visibility. It also requires a deeper understanding of system dynamics: how the robot moves, reacts, and balances competing goals in real time. But this rigorous, proactive approach is what separates truly dependable systems from those that only seem safe under perfect conditions.

Rethinking the Path to Integrated Robotics

While we’ve made meaningful progress, we’re still a long way from the harmonious human-machine collaboration imagined in Isaac Asimov’s stories.

Getting there will require autonomous systems to perform reliably and safely, not just in controlled lab settings, but in messy, unpredictable real-world environments. That means embracing uncertainty and designing robots capable of making smart, safe decisions even with incomplete information.

Ultimately, the future of autonomy won’t depend on seeing everything—it will depend on making the right decision when it can’t.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
