Algorithm that harnesses data from a new sensor could make autonomous robots more nimble.

MIT paper from Andrea Censi and Davide Scaramuzza:

The agility of a robotic system is ultimately limited by the speed of its processing pipeline. The use of a Dynamic Vision Sensor (DVS), a sensor producing asynchronous events as luminance changes are perceived by its pixels, makes it possible to have a sensing pipeline with a theoretical latency of a few microseconds. However, several challenges must be overcome: a DVS does not provide the grayscale value but only changes in the luminance; and because the output is composed of a sequence of events, traditional frame-based visual odometry methods are not applicable. This paper presents the first visual odometry system based on a DVS plus a normal CMOS camera to provide the absolute brightness values. The two sources of data are automatically spatiotemporally calibrated from logs taken during normal operation. We design a visual odometry method that uses the DVS events to estimate the relative displacement since the previous CMOS frame by processing each event individually. Experiments show that the rotation can be estimated with surprising accuracy, while the translation can be estimated only very noisily, because it produces few events due to very small apparent motion... (full paper)
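To make the idea of "events as luminance changes" concrete, here is a minimal Python sketch of a common simplified model of an event sensor: a pixel emits an event when its log-brightness changes by more than a threshold, and the event carries only the sign of the change, not the grayscale value. This is an illustration, not the method from the paper. The names `Event` and `events_between`, the threshold value, and the two tiny frames are all hypothetical, and a real DVS fires asynchronously per pixel rather than by comparing two frames.

```python
import math
from typing import List, NamedTuple


class Event(NamedTuple):
    t: float       # timestamp in seconds (assumed unit)
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 if the pixel got brighter, -1 if darker


def events_between(prev: List[List[float]],
                   curr: List[List[float]],
                   t: float,
                   threshold: float = 0.15,
                   eps: float = 1e-3) -> List[Event]:
    """Emit one event per pixel whose log-brightness changed by >= threshold.

    Simplified model (an assumption): brightness values lie in [0, 1] and
    eps avoids log(0). Only the sign of the change is reported.
    """
    events = []
    for y, (row_prev, row_curr) in enumerate(zip(prev, curr)):
        for x, (a, b) in enumerate(zip(row_prev, row_curr)):
            delta = math.log(b + eps) - math.log(a + eps)
            if abs(delta) >= threshold:
                events.append(Event(t, x, y, 1 if delta > 0 else -1))
    return events


# Two hypothetical 2x2 brightness frames: one pixel brightens, one darkens.
prev_frame = [[0.5, 0.5], [0.5, 0.5]]
curr_frame = [[0.5, 0.9], [0.2, 0.5]]
events = events_between(prev_frame, curr_frame, t=0.001)
for e in events:
    print(e)
```

Unchanged pixels produce no output at all, which is why an event stream alone cannot recover absolute brightness and why the abstract pairs the DVS with a CMOS camera.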


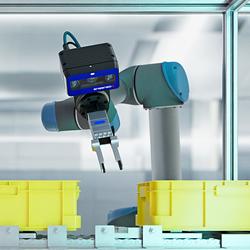

Featured Product