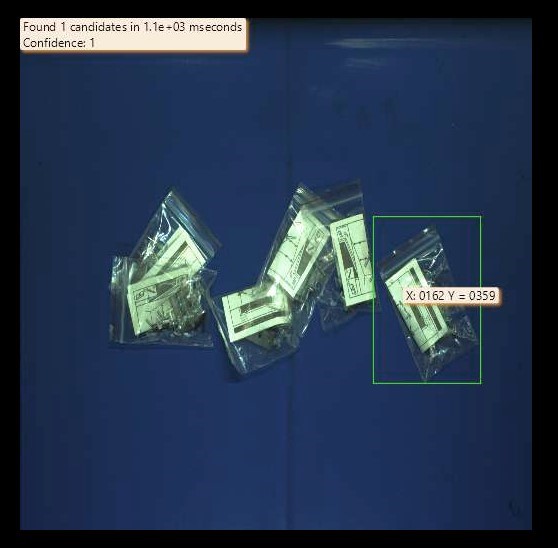

60 plastic bags per minute, perfectly positioned

Machine vision reliably detects plastic bags in the capture area to enable the robot to grip them precisely. Source: TEKVISA

For fully automated pick-and-place applications, it is important that robots can reliably grip differently shaped and translucent objects, as well as objects with complex surfaces. In powerful machine vision software, this is made possible through deep learning methods. TEKVISA has implemented such a demanding application with the support of the MVTec HALCON machine vision software.

Plastic bags with assembly components can come in many different shapes. In industrial processes, it is therefore often difficult to precisely identify and automatically grip such bags, especially if they lie in disorder and/or are made of translucent material. As a result, productivity in pick-and-place activities suffers. TEKVISA has developed a solution to master this challenge with the help of machine vision.

TEKVISA ENGINEERING, with locations in Spain and Portugal, specializes in the development of digital inspection systems for quality control and process automation in industrial environments based on state-of-the-art machine vision technologies. Since its foundation, TEKVISA has pursued the goal of developing and launching particularly user-friendly and advanced systems for a wide range of industries, such as automotive and food. The company has more than 14 years of experience with machine vision systems. In addition to deep-learning-based inspection solutions, TEKVISA also develops sophisticated robotics and bin-picking applications.

Precisely identifying and positioning accessory bags

The automation specialist has developed a robot-assisted picking system based on machine vision with deep learning algorithms for a leading manufacturer of wallboards for offices. The objective was to precisely detect plastic bags filled with accessories for the wallboards so that robots can reliably grab them. Previously, this task was performed purely manually, which was very time-consuming and therefore slowed down the entire process. To significantly increase productivity, the entire workflow was to be fully automated. Another goal was to relieve the employees of the monotonous routine task of positioning the bags, so they would be available for more demanding processes.

A special challenge had to be taken into account when developing an appropriate automation solution: The bags contain a large variety of different types of accessories. These include, for example, fastening materials such as screws, nuts and dowels, pens and highlighters for writing on the whiteboards, pins for the cork wall panels or even sponges for wiping the wall surfaces. Consequently, the bags can vary in size, weight, and appearance. Additionally, they are randomly shaped and, because of their elasticity, may also be compressed, stretched, or deformed in some other way.

Mastering high product variance with machine vision

This enormously high product variance presented the engineers at TEKVISA with major challenges: The goal was to develop a flexible solution based on machine vision that reliably detects all conceivable types and shapes of accessory bags and thus enables safe gripping processes. An important feature that had to be considered was that the system should identify those bags on the conveyor belt that could best be picked up by the robot arm based on their position and orientation. "We knew from the very beginning that the solution could only consist of a clever combination of classic machine vision methods and artificial-intelligence-based technologies such as deep learning", explains Paulo Santos, co-founder and CTO of TEKVISA.

The system consists of a high-resolution color area scan camera and special lighting that minimizes reflections, paving the way for precise detection of each bag's contents. The centerpiece of the application is an innovative machine vision system. It precisely identifies the plastic bags lying on a conveyor belt, enabling a robot to pick them up accurately. In the next step, the robot places the bags with high precision on the respective wallboard, shortly before the final packaging process.

Combination of classic machine vision and deep learning

Based on the many different appearances and positions of the bags, the machine vision solution selects the optimal candidates for picking in each case. This is done using the standard machine vision software MVTec HALCON. The software has a library of over 2,100 operators, including the most modern and powerful deep learning methods. The deep learning method "Object Detection" is particularly useful for TEKVISA's requirements. First, the system is comprehensively trained with sample images using the deep learning algorithms it contains. This is how the software learns the numerous different characteristics the bags can have. This leads to a very robust recognition rate – even with an almost infinite variance of objects. The bags not selected for gripping are sorted out and then resupplied into the system. Through repositioning, they then take a more favorable position on the conveyor, allowing the robot to pick them up more easily and place them for shipping. Even overlapping and stacked bags can be gripped and picked this way. The system is able to analyze and precisely identify up to 60 bags per minute using the integrated machine vision software.
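The selection step described above can be illustrated with a minimal Python sketch. It is not TEKVISA's implementation and does not use the HALCON API: the `Detection` class, the function names, and both thresholds (`min_score`, `max_overlap`) are hypothetical, and the rule "confident and barely overlapping" is only one plausible way to decide which bags to pick and which to send back for repositioning. The last function simply converts the stated throughput into a time budget per bag.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    """One detected bag: axis-aligned box in pixels plus a confidence score."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float
    score: float


def iou(a, b):
    """Intersection over union of two axis-aligned boxes (0.0 if disjoint)."""
    w = min(a.x_max, b.x_max) - max(a.x_min, b.x_min)
    h = min(a.y_max, b.y_max) - max(a.y_min, b.y_min)
    if w <= 0 or h <= 0:
        return 0.0
    inter = w * h
    area_a = (a.x_max - a.x_min) * (a.y_max - a.y_min)
    area_b = (b.x_max - b.x_min) * (b.y_max - b.y_min)
    return inter / (area_a + area_b - inter)


def select_pick_candidates(detections, min_score=0.8, max_overlap=0.1):
    """Return confident detections that barely overlap any other bag, best first.

    Both thresholds are illustrative assumptions, not values from the project.
    Everything not returned would be recirculated on the conveyor.
    """
    candidates = []
    for d in detections:
        if d.score < min_score:
            continue
        worst = max((iou(d, o) for o in detections if o is not d), default=0.0)
        if worst <= max_overlap:
            candidates.append(d)
    return sorted(candidates, key=lambda d: d.score, reverse=True)


def time_budget_ms(bags_per_minute):
    """Average time available per bag for imaging, inference, and selection."""
    return 60_000 / bags_per_minute
```

At the stated rate of 60 bags per minute, `time_budget_ms(60)` gives an average of 1,000 ms per bag, which is the window the whole vision pipeline has to fit into.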

Camera and robot harmonize perfectly thanks to hand-eye calibration

In addition to deep learning technologies, classic machine vision methods, which are also an integral part of MVTec HALCON, are responsible for the robust recognition rates. Alongside image acquisition and the various tools for pre-processing the images, hand-eye calibration is an important feature. This step is required in advance to enable the robot to accurately grip and place the bags observed by a stationary 2D camera during operation. During hand-eye calibration, a calibration plate is attached to the robot's gripper arm and brought into the camera's field of view. Several images are taken at different robot positions and correlated with the robot's axis positions. The result is a "common" coordinate system for camera and robot. This allows the robot to grip the components at the positions that were detected immediately beforehand by the camera. The precise determination of the object position with an accuracy of 0.1 millimeters allows a success rate of 99.99 percent to be achieved during the gripping process.
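The idea of a common coordinate system can be sketched in a few lines of Python. This is a deliberately simplified planar stand-in, not HALCON's hand-eye calibration, which works with full 3D poses of camera, robot, and calibration plate. The sketch assumes the bags lie in a single plane and that pairs of pixel positions and robot positions of the same reference points are already available; it fits a least-squares affine map between the two. All function names are hypothetical.

```python
def det3(m):
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))


def solve3(m, rhs):
    """Solve the 3x3 linear system m * x = rhs with Cramer's rule."""
    d = det3(m)
    if abs(d) < 1e-12:
        raise ValueError("need at least three non-collinear calibration points")
    solution = []
    for col in range(3):
        replaced = [row[:] for row in m]
        for r in range(3):
            replaced[r][col] = rhs[r]
        solution.append(det3(replaced) / d)
    return solution


def fit_pixel_to_robot(pixels, robot_points):
    """Least-squares affine map from image pixels (u, v) to robot plane (x, y).

    Returns two coefficient triples, one for x and one for y, such that
    x = ax*u + bx*v + cx and y = ay*u + by*v + cy.
    """
    rows = [(u, v, 1.0) for u, v in pixels]
    # Normal equations: (A^T A) * coeffs = A^T * target, solved per axis.
    normal = [[sum(r[i] * r[j] for r in rows) for j in range(3)]
              for i in range(3)]
    rhs_x = [sum(r[i] * p[0] for r, p in zip(rows, robot_points))
             for i in range(3)]
    rhs_y = [sum(r[i] * p[1] for r, p in zip(rows, robot_points))
             for i in range(3)]
    return solve3(normal, rhs_x), solve3(normal, rhs_y)


def pixel_to_robot(transform, u, v):
    """Convert a detected pixel position into robot coordinates."""
    (ax, bx, cx), (ay, by, cy) = transform
    return ax * u + bx * v + cx, ay * u + by * v + cy
```

Once fitted, the transform is applied to every detected bag position before it is handed to the robot. Using more than the minimum of three reference points averages out measurement noise, which mirrors the article's point that several images at different robot positions are taken.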

"A large number of different bags with varying sizes, deformation and contents, as well as their overlapping positions on the conveyor belt – all these factors presented us with enormous challenges in this project. We were unable to address these with purely classic machine vision methods. MVTec HALCON, with its combination of traditional methods and modern deep learning technologies, has proven to be the ideal solution, enabling us to achieve outstanding detection rates despite the wide variety of objects. This paves the way for our goal: the end-to-end automation of the entire process surrounding the packaging of wallboards. Additionally, we benefit from increased productivity and flexibility, enabling us to address various scenarios within the same application", sums up Paulo Santos.

About MVTec Software GmbH

MVTec is a leading manufacturer of standard software for machine vision. MVTec products are used in a wide range of industries, such as semiconductor and electronics manufacturing, battery production, agriculture and food, as well as logistics. They enable applications like surface inspection, optical quality control, robot guidance, identification, measurement, classification, and more. By providing modern technologies such as 3D vision, deep learning, and embedded vision, software by MVTec also enables new automation solutions for the Industrial Internet of Things, also known as Industry 4.0. With locations in Germany, the USA, France, China, and Taiwan, as well as an established network of international distributors, MVTec is represented in more than 35 countries worldwide. www.mvtec.com
