Sensing is essential for robots to perform the functions they were developed to execute. Sensing capabilities such as sight, touch, and hearing are making robots appear more human, and they are possible because of algorithms that rely on feedback from sensors.

Len Calderone

Robots can’t actually see, hear, or feel the way we do, but by using sensors they can gather and record information about the environment around them. A robot appears to respond to its surroundings because its software interprets that sensor information.

For example, a sensor measures the distance between the robot and nearby objects and sends those measurements to a computer, whose programs use them to decide what to do. The computer can then instruct the robot to stop if it gets too close to an object, or to steer around an object that lies in its path.
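The stop-or-avoid behavior described above can be sketched as a simple threshold rule. This is a minimal Python sketch, not any particular robot's controller: the two distance thresholds and the `choose_action` function are hypothetical, and a real system would also account for speed, sensor noise, and where the obstacle lies relative to the robot's heading.

```python
from enum import Enum


class Action(Enum):
    CONTINUE = "continue"
    AVOID = "avoid"
    STOP = "stop"


# Hypothetical thresholds in metres; real values depend on speed and sensor range.
STOP_DISTANCE_M = 0.3
AVOID_DISTANCE_M = 1.0


def choose_action(distance_m: float) -> Action:
    """Map a single range-sensor reading to a motion command."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    if distance_m <= STOP_DISTANCE_M:
        return Action.STOP       # too close: halt immediately
    if distance_m <= AVOID_DISTANCE_M:
        return Action.AVOID      # object ahead: steer around it
    return Action.CONTINUE       # path is clear


if __name__ == "__main__":
    for reading in (2.5, 0.8, 0.2):
        print(f"{reading} m -> {choose_action(reading).value}")
```

Checking the stop threshold first means the most urgent response always wins when both conditions would apply.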

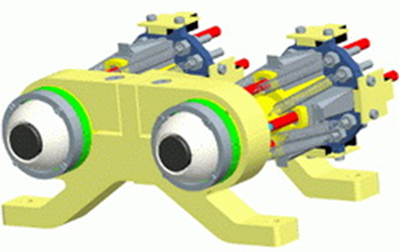

Multiple cameras, combined with laser range finders and other sensors, enable a robot to detect the objects around it. We humans use prior knowledge of our surroundings to see in 3-D. Robots can combine the same kind of information with algorithms: the robot captures images through two cameras, and its computer compares the two views to create a 3-D image. By merging prior knowledge with visual information, robots can perceive depth in much the same manner that we do.
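Comparing the images from two cameras to recover depth is commonly done by measuring how far a feature shifts horizontally between the left and right views (the disparity, d) and applying Z = f × B / d, where f is the focal length in pixels and B is the distance between the cameras. The sketch below assumes rectified, parallel cameras and uses a toy one-row block-matching search; the function names, scanlines, and camera parameters are illustrative only, not taken from any specific robot.

```python
from typing import List, Optional


def best_disparity(left_row: List[float], right_row: List[float], x: int,
                   window: int = 2, max_disp: int = 10) -> Optional[int]:
    """Find how far the patch around column x in the left image has shifted
    in the right image, scoring candidates by sum of absolute differences."""
    best_d, best_cost = None, float("inf")
    for d in range(max_disp + 1):
        if x - d - window < 0 or x + window >= len(left_row):
            break  # candidate patch would fall off the image
        cost = sum(abs(left_row[x + k] - right_row[x - d + k])
                   for k in range(-window, window + 1))
        if cost < best_cost:
            best_d, best_cost = d, cost
    return best_d


def depth_from_disparity(focal_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth of a point seen by two rectified cameras: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Toy scanlines: the same bright feature appears 3 pixels further left
    # in the right image than in the left image.
    left = [0.0] * 20
    right = [0.0] * 20
    left[10:13] = [5.0, 9.0, 5.0]
    right[7:10] = [5.0, 9.0, 5.0]
    print("disparity:", best_disparity(left, right, x=11))
    # Hypothetical camera: 700 px focal length, cameras 0.12 m apart.
    print("depth (m):", depth_from_disparity(700.0, 0.12, 42.0))
```

With a 700-pixel focal length and cameras 0.12 m apart, a 42-pixel disparity corresponds to a depth of 2 m; nearer objects shift more between the two views, so depth falls as disparity grows.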

Eyeshots, Courtesy of the University of Genoa

To us humans, a red apple isn't actually red. In fact, it doesn't have a color at all. Instead, the molecules on the apple's surface reflect wavelengths of light that your brain perceives as red. This is basically how a robot senses color: light is reflected from an object, and the computer takes this information and compares the wavelengths. For example, a red apple reflects proportionally more long-wavelength (red) light than a green apple does. In reality, the procedure is a lot more complicated and would take a volume to explain in detail, but you get the idea.
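A typical camera does not measure wavelengths directly; it reports how much light falls into red, green, and blue channels. One simple way to compare them is to look at each channel's share of the total, so the same apple gets the same label whether it is brightly lit or in shade. This Python sketch is illustrative only: the `dominant_color` function and its 0.45 dominance threshold are assumptions, and practical systems often work in other color spaces such as HSV.

```python
def dominant_color(r: float, g: float, b: float, threshold: float = 0.45) -> str:
    """Rough color label from a pixel's red, green, and blue intensities.

    Uses each channel's share of the total rather than raw brightness, so
    the label stays the same when the light gets uniformly brighter or dimmer.
    The default threshold is an assumed value, not a standard.
    """
    total = r + g + b
    if total <= 0:
        return "black"
    shares = {"red": r / total, "green": g / total, "blue": b / total}
    name = max(shares, key=shares.get)
    return name if shares[name] > threshold else "unclear"


if __name__ == "__main__":
    print(dominant_color(180, 40, 30))   # brightly lit red apple
    print(dominant_color(90, 20, 15))    # the same apple in shade
    print(dominant_color(60, 170, 50))   # green apple
```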

The Naval Research Laboratory developed a new robot, named SAFFiR (Shipboard Autonomous Firefighting Robot), which can handle a fire hose, throw extinguisher grenades, climb ladders, crawl through cramped spaces and see through smoke. Using its visual sensors, SAFFiR is able to detect, comprehend and react to gestures so that the robot can work in concert with humans.

How a robot is given a sense of touch depends on what it will handle: its tactile sensor must suit the material of the object being gripped, whether that material is rigid or non-rigid.

A device called Bio Tac® is a product of SynTouch LLC, which has integrated it into a variety of robotic hands. The Bio Tac sensor can feel pressure, vibration, texture, heat, and cold much as human fingers do, and a soft, flexible, fluid-filled cover can be worn over it to simulate skin.

The Shadow Robot Company created a hand that uses Bio Tac devices to allow precise manipulation control and is even more touch-sensitive than a human hand. The Shadow Motor Hand is a pneumatic hand that employs 34 tactile sensors, and it can be fitted with a number of touch-sensing options to increase its level of interaction with its surroundings.

The Shadow Hand was designed to use less force than a human hand, thereby avoiding the possibility of the hand applying too much pressure. Position tracking on the hand is accurate to better than 1 degree.

A robot equipped with a tactile sensor in its hand can mimic the human fingertip, and it can decide how to explore the outside world by copying human strategies. The sensor can also tell where on the fingertip forces are applied, and in which direction.
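Where and in which direction a force acts can be estimated from an array of sensing points (taxels). The minimal sketch below assumes a hypothetical sensor that reports each taxel's position and a three-axis force: the contact point is taken as the centroid of the taxel positions weighted by normal force, and the direction comes from summing the force vectors. The article does not describe how the Bio Tac itself computes contact, so this is only an illustration of the idea.

```python
import math
from typing import List, NamedTuple, Optional, Tuple

# Hypothetical taxel reading: (x_mm, y_mm, fx_N, fy_N, fz_N), where fz is the
# force pressing into the sensor and fx, fy are sideways (shear) forces.
Taxel = Tuple[float, float, float, float, float]


class Contact(NamedTuple):
    x_mm: float
    y_mm: float
    magnitude_n: float
    direction: Tuple[float, float, float]  # unit vector of the net force


def estimate_contact(taxels: List[Taxel]) -> Optional[Contact]:
    """Locate a contact and the direction of the net force on a fingertip."""
    fz_total = sum(t[4] for t in taxels)
    if fz_total <= 0:
        return None  # nothing is pressing on the sensor
    # Contact point: taxel positions weighted by how hard each is pressed.
    x = sum(t[0] * t[4] for t in taxels) / fz_total
    y = sum(t[1] * t[4] for t in taxels) / fz_total
    # Net force vector: sum of every taxel's force components.
    fx = sum(t[2] for t in taxels)
    fy = sum(t[3] for t in taxels)
    magnitude = math.sqrt(fx * fx + fy * fy + fz_total * fz_total)
    direction = (fx / magnitude, fy / magnitude, fz_total / magnitude)
    return Contact(x, y, magnitude, direction)


if __name__ == "__main__":
    readings = [
        (0.0, 0.0, 0.0, 0.0, 1.0),
        (2.0, 0.0, 0.0, 0.0, 1.0),
    ]
    print(estimate_contact(readings))
```

Two taxels pressed equally hard place the contact halfway between them; adding shear force tilts the direction vector away from straight into the sensor.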

Using the Bio Tac sensor, Jeremy Fischel and Gerald Loeb of the Biomedical Engineering Department at USC used a specialized test-bed to expose the sensor to 117 common materials gathered from fabric, stationery, and hardware stores. The sensor correctly identified a randomly selected material 95% of the time, after making an average of five exploratory movements.
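The article does not say how the identification decisions were made. One common framing, assumed here, is sequential Bayesian updating: keep a probability for every candidate material, update it after each exploratory movement, and stop once one material is sufficiently likely. In this Python sketch the three materials, their single texture feature, the Gaussian noise model, and the 0.95 stopping confidence are all hypothetical.

```python
import math
from typing import Dict, List, Optional, Tuple

# Hypothetical models: mean and standard deviation of one texture feature
# (for example, vibration power) recorded during an exploratory movement.
MODELS: Dict[str, Tuple[float, float]] = {
    "silk": (1.0, 0.3),
    "denim": (4.0, 0.5),
    "sandpaper": (8.0, 0.8),
}


def log_gaussian(x: float, mean: float, std: float) -> float:
    """Log-likelihood of reading x under a normal distribution."""
    return -0.5 * ((x - mean) / std) ** 2 - math.log(std * math.sqrt(2 * math.pi))


def identify(readings: List[float],
             confidence: float = 0.95) -> Tuple[Optional[str], int, float]:
    """Update a belief over materials after each movement; stop once confident.

    Returns (best material, movements used, probability of best material).
    """
    # Start with every material equally likely (log-probabilities avoid underflow).
    log_belief = {name: -math.log(len(MODELS)) for name in MODELS}
    best, prob, moves = None, 0.0, 0
    for x in readings:
        moves += 1
        for name, (mean, std) in MODELS.items():
            log_belief[name] += log_gaussian(x, mean, std)
        # Renormalize so the probabilities sum to 1 (log-sum-exp).
        peak = max(log_belief.values())
        norm = peak + math.log(sum(math.exp(v - peak) for v in log_belief.values()))
        log_belief = {name: v - norm for name, v in log_belief.items()}
        best = max(log_belief, key=log_belief.get)
        prob = math.exp(log_belief[best])
        if prob >= confidence:
            break  # confident enough: no more exploratory movements needed
    return best, moves, prob


if __name__ == "__main__":
    # First reading is ambiguous between silk and denim; the second settles it.
    print(identify([2.2, 4.0, 4.1]))
```

Stopping as soon as the belief is confident is what lets the number of exploratory movements vary from object to object rather than being fixed in advance.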

For a robot to have hearing, it must have speech recognition, which enables it to receive and interpret words spoken to it and to understand and carry out spoken commands. The robot’s memory must hold a database, or vocabulary, of words and a fast way of comparing this data with what it hears.
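Comparing what was heard against a stored vocabulary can be sketched with Python's standard `difflib` module, which scores how similar two strings are. The sketch assumes speech has already been converted to text by a recognizer; the command words, action names, and the 0.75 similarity cutoff are hypothetical.

```python
import difflib
from typing import Optional

# Hypothetical vocabulary: spoken command word -> robot action name.
COMMANDS = {
    "stop": "halt_motors",
    "forward": "drive_forward",
    "left": "turn_left",
    "right": "turn_right",
    "grab": "close_gripper",
}


def interpret(transcript: str, cutoff: float = 0.75) -> Optional[str]:
    """Return the action for the first word that matches the vocabulary.

    difflib scores similarity from 0 to 1; words scoring below the cutoff
    are ignored, so small recognition errors still match the right command.
    """
    vocabulary = list(COMMANDS)
    for word in transcript.lower().split():
        match = difflib.get_close_matches(word, vocabulary, n=1, cutoff=cutoff)
        if match:
            return COMMANDS[match[0]]
    return None


if __name__ == "__main__":
    for heard in ("Please STOP now", "go forwad", "hello there"):
        print(heard, "->", interpret(heard))
```

Fuzzy matching tolerates small recognition errors such as "forwad" for "forward", while the cutoff keeps unrelated words from triggering commands.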

Researchers at Honda developed a robot named Hearbo that not only registers sounds but also knows how to interpret them, using a three-step paradigm: localization, separation, and recognition. Hearbo can analyze what somebody says in real time. Honda's system, named HARK (HRI-JP Audition for Robots with Kyoto University), gives Hearbo a microphone array with a wide listening range. Using eight microphones, it can separate and recognize up to seven different sound sources at once, something that humans with two ears cannot do. HARK first singles out the sounds generated by Hearbo's own 17 motors and then processes the remaining audio, applying a sound source localization algorithm that pinpoints the origin of a sound to within one degree.
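HARK's own localization algorithm is not described here, so the sketch below uses a simpler, well-known substitute for illustration: with two microphones, cross-correlate the signals to find the time difference of arrival τ, then convert that delay to an angle with θ = arcsin(c × τ / d), where c is the speed of sound and d is the microphone spacing. The sample rate, spacing, simulated click, and function names are assumptions. A two-microphone pair also cannot tell front from back, which is one reason arrays like Hearbo's use more microphones.

```python
import math
from typing import List

SPEED_OF_SOUND_M_S = 343.0  # in air at about 20 degrees C


def estimate_delay(left: List[float], right: List[float], max_lag: int) -> int:
    """Return the lag (in samples) that best aligns the two signals.

    A positive lag means the sound reached the left microphone first.
    Found by brute-force cross-correlation over lags -max_lag..max_lag.
    """
    best_lag, best_score = 0, float("-inf")
    n = min(len(left), len(right))
    for lag in range(-max_lag, max_lag + 1):
        score = sum(left[i] * right[i + lag]
                    for i in range(n) if 0 <= i + lag < n)
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag


def direction_of_arrival(lag: int, sample_rate_hz: float,
                         mic_spacing_m: float) -> float:
    """Angle in degrees from straight ahead; positive means toward the left mic."""
    tau = lag / sample_rate_hz                      # delay in seconds
    s = SPEED_OF_SOUND_M_S * tau / mic_spacing_m    # sin(theta)
    s = max(-1.0, min(1.0, s))                      # guard against rounding past +/-1
    return math.degrees(math.asin(s))


if __name__ == "__main__":
    # Simulated click: it reaches the right microphone 3 samples later.
    left = [0.0] * 50
    right = [0.0] * 50
    left[20] = 1.0
    right[23] = 1.0
    lag = estimate_delay(left, right, max_lag=10)
    print(lag, round(direction_of_arrival(lag, 16_000, 0.2), 1))
```

At 16 kHz with 0.2 m spacing, a 3-sample delay places the source roughly 19 degrees toward the left microphone. Each sample of delay corresponds to several degrees here, so reaching one-degree accuracy requires finer timing, more microphones, or more sophisticated methods.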

Hearbo can understand and follow a particular voice in a crowded room and then work out how to respond to it. It can even listen to a piece of music and understand it in much the same way we do. Hearbo can also accurately detect the location of a person calling for help in a disaster.

Hearbo is designed to capture all the sounds around it and determine where each one comes from before converging on a particular source. This lets it distinguish between a human issuing commands and a singer on the radio.

Just how far can robots go in our everyday lives? The Haohai Robot Restaurant in Harbin, China, is staffed entirely by eighteen robots. Upon entering the restaurant, customers are greeted verbally by a robot and then led to a table. A robot waiter runs along tracks in the floor, serving dishes cooked by a team of robot chefs.

The food is also served to the proper table from an overhead conveyor belt, and the serving robot removes the food with its extendable arms. While the guests wait for their food, they are serenaded by a singing robot and entertained by an automated dog. What more could we ask for?

A robot with skin and the human abilities of sight, hearing, and touch starts to sound less like a robot and more like the android Lieutenant Commander Data in Star Trek. Maybe we will all go where no man has gone before.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
