Robot Vision vs Computer Vision: What's the Difference?

Alex Owen-Hill | Robotiq

Reprinted with permission from the Robotiq blog:

What's the difference between Robot Vision, Computer Vision, Image Processing, Machine Vision and Pattern Recognition? It can get confusing to know which one is which. We take a look at what all these terms mean and how they relate to robotics. After reading this article, you never need to be confused again!

People sometimes get mixed up when they're talking about robotic vision techniques. They will say that they are using "Computer Vision" or "Image Processing" when, in fact, they mean "Machine Vision." It's a completely understandable mistake. The lines between all of the different terms are sometimes blurred.

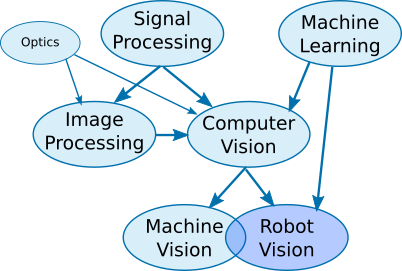

In this article, we break down the "family tree" of Robot Vision and show where it fits within the wider field of Signal Processing.

What is Robot Vision?

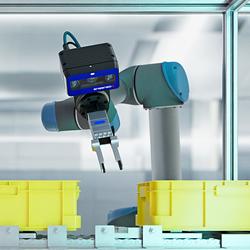

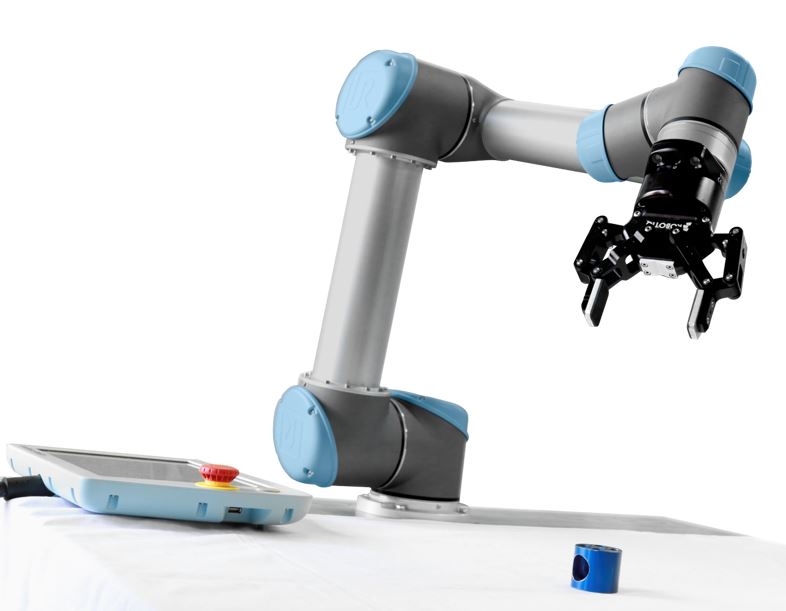

In basic terms, Robot Vision involves using a combination of camera hardware and computer algorithms to allow robots to process visual data from the world. For example, your system could have a 2D camera which detects an object for the robot to pick up. A more complex example might be to use a 3D stereo camera to guide a robot to mount wheels onto a moving vehicle.

Without Robot Vision, your robot is essentially blind. This is not a problem for many robotic tasks, but for some applications Robot Vision is useful or even essential.

Robot Vision's Family Tree

Robot Vision is closely related to Machine Vision, which we'll introduce in a moment. They are both closely related to Computer Vision. If we were talking about a family tree, Computer Vision could be seen as their "parent." However, to understand where they all fit into the world, we have to take a step higher to introduce the "grandparent" - Signal Processing.

Signal Processing

Signal Processing involves processing electronic signals to clean them up (e.g. removing noise), extract information, prepare them for output to a display or prepare them for further processing. Anything can be a signal, more or less. There are various types of signals which can be processed, e.g. analog electrical signals, digital electronic signals, frequency signals etc. An image is basically just a signal with two (or more) dimensions. For Robot Vision, we're interested in the processing of images. So, we're talking about Image Processing, right? Wrong.
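As a rough illustration of the "removing noise" case and of the idea that an image is a two-dimensional signal, here is a minimal Python sketch. The function names `moving_average` and `box_blur` and the sample data are made up for this example; a real system would normally use a dedicated library rather than hand-written loops.

```python
def moving_average(signal, window=3):
    """Smooth a 1D signal by averaging each sample with its neighbours."""
    half = window // 2
    smoothed = []
    for i in range(len(signal)):
        lo = max(0, i - half)
        hi = min(len(signal), i + half + 1)
        smoothed.append(sum(signal[lo:hi]) / (hi - lo))
    return smoothed


def box_blur(image, window=3):
    """Treat an image as a 2D signal: smooth along rows, then along columns."""
    rows = [moving_average(row, window) for row in image]
    # Transpose, smooth what were the columns, and transpose back.
    cols = [moving_average(list(col), window) for col in zip(*rows)]
    return [list(row) for row in zip(*cols)]


# A flat 1D signal with a single noisy spike (hypothetical data).
noisy_signal = [10, 10, 40, 10, 10]
print(moving_average(noisy_signal))  # [10.0, 20.0, 20.0, 20.0, 10.0]

# A dark 5x5 image with a single noisy pixel in the middle (hypothetical data).
noisy_image = [[0] * 5 for _ in range(5)]
noisy_image[2][2] = 9
blurred = box_blur(noisy_image)
print(blurred[2][2])  # 1.0
```

The same averaging routine handles both cases, which is the point: the image version simply applies the 1D filter along each dimension in turn.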

Image Processing vs Computer Vision

Computer Vision and Image Processing are like cousins, but they have quite different aims. Image Processing techniques are primarily used to improve the quality of an image, convert it into another format (like a histogram) or otherwise change it for further processing. Computer Vision, on the other hand, is more about extracting information from images to make sense of them. So, you might use Image Processing to convert a color image to grayscale and then use Computer Vision to detect objects within that image. If we look even further up the family tree, we see that both of these domains are heavily influenced by the domain of Physics, specifically Optics.
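To make the distinction concrete, the sketch below pairs two Image Processing steps (image in, image or histogram out) with a toy Computer Vision step (image in, information out). It is only an illustration under simple assumptions: the function names are invented for this example, the grayscale weights are the common ITU-R BT.601 luma coefficients, and "detecting an object" is reduced to finding the bounding box of bright pixels.

```python
def to_grayscale(rgb_image):
    """Image Processing: colour image in, grayscale image out."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb_image]


def histogram(gray_image, bins=4):
    """Image Processing: convert the image into another format (a histogram)."""
    counts = [0] * bins
    for row in gray_image:
        for value in row:
            counts[min(value * bins // 256, bins - 1)] += 1
    return counts


def find_bright_object(gray_image, threshold=128):
    """Toy Computer Vision: image in, information out.

    Returns the bounding box (top, left, bottom, right) of all pixels at or
    above the threshold, or None if there are none.
    """
    hits = [(y, x) for y, row in enumerate(gray_image)
            for x, value in enumerate(row) if value >= threshold]
    if not hits:
        return None
    ys = [y for y, _ in hits]
    xs = [x for _, x in hits]
    return (min(ys), min(xs), max(ys), max(xs))


# Hypothetical 4x4 colour image: a white 2x2 object on a black background.
BLACK, WHITE = (0, 0, 0), (255, 255, 255)
color_image = [
    [BLACK, BLACK, BLACK, BLACK],
    [BLACK, BLACK, WHITE, WHITE],
    [BLACK, BLACK, WHITE, WHITE],
    [BLACK, BLACK, BLACK, BLACK],
]

gray = to_grayscale(color_image)
print(histogram(gray))           # [12, 0, 0, 4]
print(find_bright_object(gray))  # (1, 2, 2, 3)
```

Note how the first two functions return something you could still display or process further, while the last one returns a fact about the scene.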

Pattern Recognition and Machine Learning

So far, so simple. Where it starts to get a little more complex is when we include Pattern Recognition, or more broadly Machine Learning, in the family tree. This branch of the family is focused on recognizing patterns in data, which is quite important for many of the more advanced functions required of Robot Vision. For example, to be able to recognize an object from its image, the software must be able to detect if the object it sees is similar to previous objects. Machine Learning, therefore, is another parent of Computer Vision alongside Signal Processing.

However, not all Computer Vision techniques require Machine Learning. You can also use Machine Learning on signals which are not images. In practice, the two domains are often combined like this: Computer Vision detects features and information from an image, which are then used as an input to the Machine Learning algorithms. For example, Computer Vision detects the size and color of parts on a conveyor belt, then Machine Learning decides if those parts are faulty based on its learned knowledge about what a good part should look like.
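The conveyor belt example could be sketched roughly as follows. This is a deliberately simple stand-in for "Machine Learning", not a recommendation of a particular algorithm: the model only learns the mean and spread of each feature from known-good parts and flags anything that falls too far outside that range. The feature values (size in millimetres, a hue value for color) are invented and are assumed to have already been measured by a Computer Vision step.

```python
from statistics import mean, stdev


def learn_good_part(good_features):
    """Learn what a good part looks like: (mean, spread) for each feature."""
    columns = list(zip(*good_features))
    return [(mean(column), stdev(column)) for column in columns]


def is_faulty(features, model, max_sigmas=3.0):
    """Flag a part if any feature is more than max_sigmas spreads from the mean."""
    for value, (mu, sigma) in zip(features, model):
        if abs(value - mu) > max_sigmas * max(sigma, 1e-9):
            return True
    return False


# Hypothetical (size_mm, hue) features measured on known-good parts.
good_parts = [
    (20.0, 200.0),
    (21.0, 198.0),
    (19.0, 202.0),
    (20.0, 201.0),
    (20.0, 199.0),
]
model = learn_good_part(good_parts)

print(is_faulty((20.0, 200.0), model))  # False: looks like a good part
print(is_faulty((12.0, 200.0), model))  # True: far too small
print(is_faulty((20.0, 150.0), model))  # True: wrong color
```

The decision function never sees an image, only numbers. That is why the same kind of learning can be applied to signals which are not images.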

Machine Vision

Now we get to Machine Vision, and everything changes. This is because Machine Vision is quite different to all the previous terms. It is more about specific applications than it is about techniques. Machine Vision refers to the industrial use of vision for automatic inspection, process control and robot guidance. The rest of the "family" are scientific domains, whereas Machine Vision is an engineering domain.

In some ways, you could think of it as a child of Computer Vision because it uses techniques and algorithms for Computer Vision and Image Processing. But, although it's used to guide robots, it's not exactly the same thing as Robot Vision.

Robot Vision

Finally, we arrive at Robot Vision. If you've been following the article up until now, you will realize that Robot Vision incorporates techniques from all of the previous terms. In many cases, Robot Vision and Machine Vision are used interchangeably. However, there are a few subtle differences. Some Machine Vision applications, such as part inspection, have nothing to do with robotics - the part is merely placed in front of a vision sensor which looks for faults.

Also, Robot Vision is not only an engineering domain. It is a science with its own specific areas of research. Unlike pure Computer Vision research, Robot Vision must incorporate aspects of robotics into its techniques and algorithms, such as kinematics, reference frame calibration and the robot's ability to physically affect the environment. Visual Servoing is a perfect example of a technique which can only be termed Robot Vision, not Computer Vision. It involves controlling the motion of a robot by using the feedback of the robot's position as detected by a vision sensor.
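A very reduced sketch of the Visual Servoing idea is shown below. Everything in it is an assumption made for illustration: the camera is simulated by a function that reports the robot's tool position in pixels, the calibration between pixels and metres is a single scale factor (`pixels_per_metre`), and the controller is a plain proportional loop. Real visual servoing involves the robot's kinematics and a properly calibrated camera model.

```python
def camera_measure(robot_xy, pixels_per_metre=1000.0):
    """Simulated vision sensor: reports the robot tool position in pixels."""
    return (robot_xy[0] * pixels_per_metre, robot_xy[1] * pixels_per_metre)


def visual_servo(robot_xy, target_px, gain=0.5, pixels_per_metre=1000.0,
                 tolerance_px=1.0, max_steps=100):
    """Move the robot until the camera sees it at target_px.

    Returns the final robot position in metres and the number of moves made.
    """
    x, y = robot_xy
    for step in range(max_steps):
        # Feedback: where does the vision sensor see the robot right now?
        seen_x, seen_y = camera_measure((x, y), pixels_per_metre)
        err_x = target_px[0] - seen_x
        err_y = target_px[1] - seen_y
        if (err_x ** 2 + err_y ** 2) ** 0.5 <= tolerance_px:
            return (x, y), step
        # Convert the pixel error to metres and move a fraction of the way.
        x += gain * err_x / pixels_per_metre
        y += gain * err_y / pixels_per_metre
    return (x, y), max_steps


# Hypothetical task: start at the origin and reach pixel (200, 100),
# which corresponds to (0.2 m, 0.1 m) under the assumed scale.
final_xy, moves = visual_servo((0.0, 0.0), (200.0, 100.0))
print(final_xy, moves)
```

The loop closes through the camera rather than through the robot's own joint sensors, and its output is motion rather than information. That combination is what places it in Robot Vision rather than Computer Vision.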

What You Put In vs What You Get Out

One useful way of understanding the differences comes from RSIP Vision. They define some of the domains in terms of their input and output. So, to finish up this article, here are the basic inputs and outputs for each of the domains introduced above.

| Technique | Input | Output |
| --- | --- | --- |
| Signal Processing | Electrical signals | Electrical signals |
| Image Processing | Images | Images |
| Computer Vision | Images | Information/features |
| Pattern Recognition/Machine Learning | Information/features | Information |
| Machine Vision | Images | Information |
| Robot Vision | Images | Physical Action |

Would you define any of the terms differently? Which areas do you think are most important to your work? Any questions about the different domains? Tell us in the comments below or join the discussion on LinkedIn, Twitter or Facebook.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
