360° Optics Tutorial

Enabling a full object view with just one camera.

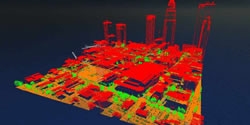

LiDAR 101: A Q&A with a Pictometry Expert

Because LiDAR uses light, the target must be visible, so it is not an all-weather solution. It won't work well in fog or other weather conditions that affect visibility, but if conditions are clear, it can operate during both day and night.

As seen on TV! Neural Enhance

From Alex J. Champandard: As seen on TV! What if you could increase the resolution of your photos using technology from CSI laboratories? Thanks to deep learning and #NeuralEnhance, it's now possible to train a neural network to zoom in to your images at 2x or even 4x. You'll get even better results by increasing the number of neurons or training with a dataset similar to your low resolution image.

The catch? The neural network is hallucinating details based on its training from example images. It's not reconstructing your photo exactly as it would have been if it was HD. That's only possible in Hollywood — but using deep learning as "Creative AI" works and it is just as cool! (github)

A Large Dataset to Train Convolutional Networks for Disparity, Optical Flow, and Scene Flow Estimation

From Computer Vision Freiburg: Recent work has shown that optical flow estimation can be formulated as a supervised learning task and can be successfully solved with convolutional networks. Training of the so-called FlowNet was enabled by a large synthetically generated dataset. The present paper extends the concept of optical flow estimation via convolutional networks to disparity and scene flow estimation. To this end, we propose three synthetic stereo video datasets with sufficient realism, variation, and size to successfully train large networks. Our datasets are the first large-scale datasets to enable training and evaluating scene flow methods. Besides the datasets, we present a convolutional network for real-time disparity estimation that provides state-of-the-art results. By combining a flow and disparity estimation network and training it jointly, we demonstrate the first scene flow estimation with a convolutional network.

This video shows impressions from various parts of our dataset, as well as state-of-the-art realtime disparity estimation results produced by one of our new CNNs... (full paper)

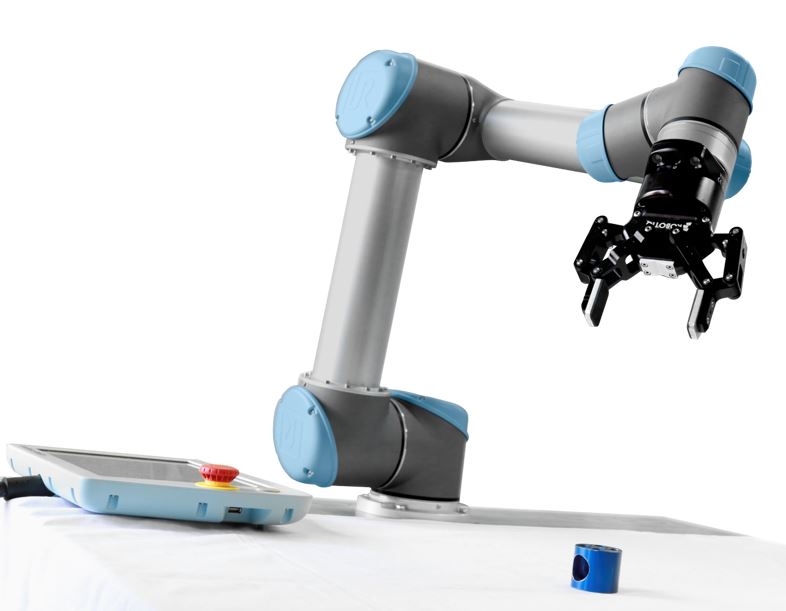

Robot Vision vs Computer Vision: What's the Difference?

Unlike pure Computer Vision research, Robot Vision must incorporate aspects of robotics into its techniques and algorithms, such as kinematics, reference frame calibration and the robot's ability to physically affect the environment.

Mapping And Navigating With An Intel RealSense R200 Camera

In our latest demonstration, Archie provides an overview of the R200 sensor and shows how it can integrate seamlessly with ROS and a TurtleBot to accurately map and navigate an environment.

Artistic Style Transfer for Videos

From Manuel Ruder, Alexey Dosovitskiy, Thomas Brox of the University of Freiburg:

In the past, manually re-drawing an image in a certain artistic style required a professional artist and a long time. Doing this for a video sequence single-handed was beyond imagination. Nowadays computers provide new possibilities. We present an approach that transfers the style from one image (for example, a painting) to a whole video sequence. We make use of recent advances in style transfer in still images and propose new initializations and loss functions applicable to videos. This allows us to generate consistent and stable stylized video sequences, even in cases with large motion and strong occlusion. We show that the proposed method clearly outperforms simpler baselines both qualitatively and quantitatively... (pdf paper)

Efficient 3D Object Segmentation from Densely Sampled Light Fields with Applications to 3D Reconstruction

From Kaan Yücer, Alexander Sorkine-Hornung, Oliver Wang, Olga Sorkine-Hornung:

Precise object segmentation in image data is a fundamental problem with various applications, including 3D object reconstruction. We present an efficient algorithm to automatically segment a static foreground object from highly cluttered background in light fields. A key insight and contribution of our paper is that a significant increase of the available input data can enable the design of novel, highly efficient approaches. In particular, the central idea of our method is to exploit high spatio-angular sampling on the order of thousands of input frames, e.g. captured as a hand-held video, such that new structures are revealed due to the increased coherence in the data. We first show how purely local gradient information contained in slices of such a dense light field can be combined with information about the camera trajectory to make efficient estimates of the foreground and background. These estimates are then propagated to textureless regions using edge-aware filtering in the epipolar volume. Finally, we enforce global consistency in a gathering step to derive a precise object segmentation both in 2D and 3D space, which captures fine geometric details even in very cluttered scenes. The design of each of these steps is motivated by efficiency and scalability, allowing us to handle large, real-world video datasets on a standard desktop computer... (paper)

Image Processing 101

Sher Minn Chong wrote a good introduction to image processing in Python:

In this article, I will go through some basic building blocks of image processing, and share some code and approaches to basic how-tos. All code written is in Python and uses OpenCV, a powerful image processing and computer vision library...

... When we’re trying to gather information about an image, we’ll first need to break it up into the features we are interested in. This is called segmentation. Image segmentation is the process of representing an image in segments to make it more meaningful and easier to analyze.

Thresholding

One of the simplest ways of segmenting an image is thresholding. The basic idea of thresholding is to replace each pixel in an image with a white pixel if a channel value of that pixel exceeds a certain threshold... (full tutorial) (iPython Notebook)
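The idea quoted above can be sketched in a few lines of plain Python. This is a minimal illustration, not the tutorial's own code: the function name `threshold`, the nested-list image layout, and the choice to paint non-matching pixels black are assumptions made for the example. In practice, OpenCV's `cv2.threshold` performs this operation on real image arrays.

```python
def threshold(image, thresh, channel=0,
              white=(255, 255, 255), black=(0, 0, 0)):
    """Return a binary copy of `image`.

    `image` is assumed to be a list of rows, each row a list of
    (r, g, b) tuples. A pixel becomes white when the chosen channel
    value exceeds `thresh`; otherwise it becomes black (assumption:
    the quoted text only describes the white case).
    """
    return [
        [white if pixel[channel] > thresh else black for pixel in row]
        for row in image
    ]


# Tiny 2x2 example image, thresholded on the red channel.
image = [
    [(10, 200, 30), (130, 40, 90)],
    [(250, 250, 250), (127, 0, 0)],
]
binary = threshold(image, thresh=127, channel=0)
```

Note that the comparison is strict, so a pixel whose channel value equals the threshold is treated as background.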

Low Cost Vision System

The vision system sends the object's coordinates to the robot so the robot can grip it regardless of its position.
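As a rough sketch of what "sending coordinates" can involve, the snippet below converts a pixel location into robot coordinates. Everything here is hypothetical: the function `pixel_to_robot`, its parameters, and the calibration values are invented for illustration, and the model assumes a camera looking straight down at a flat work surface with no lens distortion. The actual system described may work differently.

```python
import math


def pixel_to_robot(u, v, mm_per_pixel, angle_deg, origin_mm):
    """Map an image point (u, v) in pixels to robot (x, y) in millimetres.

    Hypothetical calibration parameters:
      mm_per_pixel - scale of the image on the work surface
      angle_deg    - rotation of the camera axes relative to the robot axes
      origin_mm    - robot coordinates of the image pixel (0, 0)
    """
    theta = math.radians(angle_deg)
    # Scale pixels to millimetres in the camera's own axes.
    x_cam = u * mm_per_pixel
    y_cam = v * mm_per_pixel
    # Rotate into the robot's axes, then shift by the calibrated origin.
    x = origin_mm[0] + x_cam * math.cos(theta) - y_cam * math.sin(theta)
    y = origin_mm[1] + x_cam * math.sin(theta) + y_cam * math.cos(theta)
    return x, y


# Example: object detected at pixel (320, 240) with made-up calibration.
x, y = pixel_to_robot(320, 240, mm_per_pixel=0.5,
                      angle_deg=90.0, origin_mm=(100.0, 50.0))
```

A scale, a rotation, and an offset are the smallest set of parameters that can relate two planar frames, which is why this sketch uses them; a real cell would typically obtain them from a calibration routine.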

Lumipen 2: Robust Tracking for Dynamic Projection Mapping

From University of Tokyo:

In our laboratory, the Lumipen system has been proposed to solve the time-geometric inconsistency caused by the delay when using dynamic objects. It consists of a projector and a high-speed optical axis controller with high-speed vision and mirrors, called Saccade Mirror (1ms Auto Pan-Tilt technology). Lumipen can provide projected images that are fixed on dynamic objects such as bouncing balls. However, the robustness of the tracking is sensitive to the simultaneous projection on the object, as well as the environmental lighting... (full article)

Frankenimage

From David Stolarsky:

The goal of Frankenimage is to reconstruct input (target) images with pieces of images from a large image database (the database images).

Frankenimage is deliberately in contrast with traditional photomosaics. In traditional photomosaics, more often than not, the database images that are composed together to make up the target image are so small as to be little more than glorified pixels. Frankenimage aims instead for component database images to be as large as possible in the final composition, taking advantage of structure in each database image, instead of just its average color. In this way, database images retain their own meaning, allowing for real artistic juxtaposition to be achieved between target and component images... (full description and pseudo code)
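For contrast, the traditional photomosaic approach described above, where each database image is matched only by its average color, might look like the sketch below. This illustrates the baseline that Frankenimage sets itself against, not Frankenimage's own method. The function names, the nested-list image layout, and the tiny database are all assumptions made for the example.

```python
def average_color(tile):
    """Mean (r, g, b) of a tile given as rows of (r, g, b) tuples."""
    pixels = [p for row in tile for p in row]
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def closest_by_average(target_tile, database):
    """Return the name of the database image whose average color is
    nearest (squared Euclidean distance) to the target tile's average."""
    target = average_color(target_tile)

    def distance(name):
        avg = average_color(database[name])
        return sum((a - b) ** 2 for a, b in zip(avg, target))

    return min(database, key=distance)


# Made-up two-image database and a single-pixel target tile.
database = {
    "sky": [[(90, 150, 240), (100, 160, 250)]],
    "grass": [[(40, 180, 60), (50, 170, 70)]],
}
target_tile = [[(95, 140, 230)]]
best = closest_by_average(target_tile, database)
```

Because each database image is reduced to a single color, any structure inside it is discarded, which is the limitation the description above points out.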

