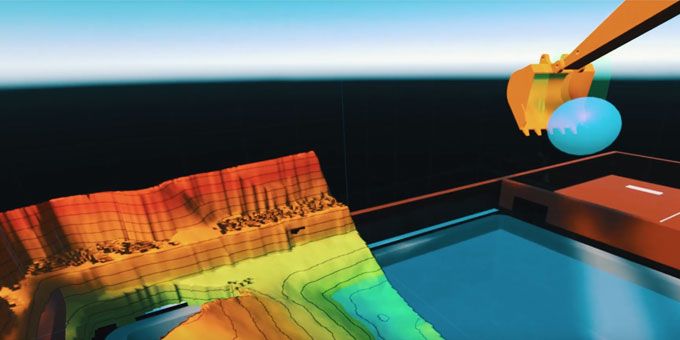

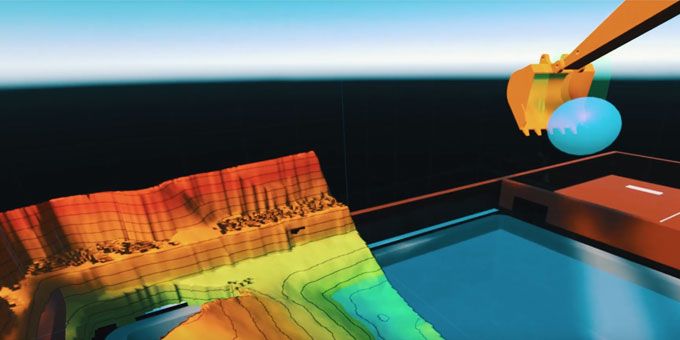

Robot Control with Semantic Commands

Pavel Savkin | SE4 Robotics

What are you doing?

We are developing a general-purpose robotic control software platform that lets the user issue semantic commands in a simulated VR environment. This allows users to interact with the world through a robot in much the same way we interact with one another.

Why is solving issues with latency so crucial?

No matter how ‘fast’ or ‘strong’ your signal, no signal can exceed the speed limit of the universe, i.e. the speed of light. Even in the best conditions, a signal travelling at light speed takes almost 3 seconds to make the round trip to the Moon and back. This makes force-feedback-style manual remote control of devices very difficult, inefficient and, in many cases, dangerous. Just imagine driving your car down the highway and needing to wait 3 seconds before you see the car ahead of you stop, and another 3 seconds after applying the brakes for them to engage! At highway speed, you had better hope you’re around 300 meters (984 feet) away from that car, or you’re going to want to drive really slowly! If we want to control the robotic pioneers of the future, which will build the crucial infrastructure needed for human habitation in space, the issue of latency must be solved!
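The round-trip figure can be checked with a quick back-of-the-envelope calculation. The sketch below assumes an average Earth-Moon distance of about 384,400 km and, for the driving analogy, a highway speed of 100 km/h. Both values are illustrative assumptions, not figures from the interview, and the function names are made up for this example.

```python
# Back-of-the-envelope latency check (illustrative assumptions, see lead-in).

SPEED_OF_LIGHT_KM_S = 299_792.458   # speed of light in vacuum, km/s
EARTH_MOON_KM = 384_400.0           # assumed average Earth-Moon distance, km


def round_trip_seconds(distance_km: float) -> float:
    """Minimum time for a signal to reach a target and come back."""
    return 2.0 * distance_km / SPEED_OF_LIGHT_KM_S


def blind_distance_m(speed_kmh: float, delay_s: float) -> float:
    """Distance a vehicle covers before a delayed command takes effect."""
    return speed_kmh / 3.6 * delay_s


if __name__ == "__main__":
    rtt = round_trip_seconds(EARTH_MOON_KM)
    print(f"Earth-Moon round trip: {rtt:.2f} s")
    # Analogy: 3 s to see the hazard plus 3 s for the brakes to respond.
    print(f"Travelled at 100 km/h in 6 s: {blind_distance_m(100.0, 6.0):.0f} m")
```

Under these assumptions the physical minimum is roughly 2.6 seconds for the round trip, and a car would cover about 167 meters during the combined 6-second delay, before any braking distance is added.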

How are you doing it?

By giving the robot a series of semantic instructions in a VR simulation, the operator decides how they wish to interact with the robot’s environment and receives simulated feedback on the likely result. Once the operator is satisfied with the simulated outcome, the queued commands are sent to the robot. The robot on the ground uses computer vision and a limited AI to interpret the commands and apply them to the designated environment, deferring back to the operator if it cannot complete a command safely or if some other unexpected event prevents it from doing so.

As the operator is running the simulation locally, they will not directly experience latency.
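The workflow described above (preview locally, queue, commit, defer on failure) can be sketched roughly as follows. This is a minimal illustration and not SE4's implementation: `Command`, `Operator`, `run_on_robot`, and the `simulate` and `can_execute` callbacks are all hypothetical names invented for this example.

```python
# Hypothetical sketch of "simulate locally, then commit" teleoperation.
# None of these names come from SE4's software.
from dataclasses import dataclass, field
from typing import Callable, List, Tuple


@dataclass
class Command:
    verb: str      # semantic action, e.g. "dig"
    target: str    # semantic object or region, e.g. "trench_a"


@dataclass
class Operator:
    simulate: Callable[[Command], bool]   # local, latency-free preview
    queue: List[Command] = field(default_factory=list)

    def plan(self, cmd: Command) -> bool:
        """Queue a command only if its simulated outcome is acceptable."""
        ok = self.simulate(cmd)
        if ok:
            self.queue.append(cmd)
        return ok

    def commit(self) -> List[Command]:
        """Hand the approved batch to the link and clear the local queue."""
        batch, self.queue = self.queue, []
        return batch


def run_on_robot(
    batch: List[Command], can_execute: Callable[[Command], bool]
) -> Tuple[List[Command], List[Command]]:
    """Execute commands in order; stop and defer at the first unsafe one.

    Returns (completed, deferred); deferred commands go back to the operator.
    """
    completed: List[Command] = []
    for i, cmd in enumerate(batch):
        if not can_execute(cmd):
            return completed, batch[i:]
        completed.append(cmd)
    return completed, []


if __name__ == "__main__":
    op = Operator(simulate=lambda c: c.verb in {"dig", "move"})
    op.plan(Command("dig", "trench_a"))
    op.plan(Command("move", "soil_pile"))
    op.plan(Command("fly", "crane"))      # rejected in simulation, never sent
    done, deferred = run_on_robot(op.commit(), lambda c: c.target != "soil_pile")
    print("completed:", done)
    print("deferred to operator:", deferred)
```

The design point is that the only latency-sensitive step is the single `commit` hand-off. Planning happens against the local simulation, and execution happens autonomously on the robot side.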

What’s SE4’s real edge here?

Bypassing latency is not the only strength of our software.

Allowing an operator to ‘queue up’ work brings a significant improvement in efficiency compared with traditional 1:1 teleoperation. Depending upon the application, an operator can stack hours, or even weeks, of robotic operation into several seconds of operator interaction.

For example, using our platform we can spend 30 seconds instructing an excavator to dig a huge volume of soil, a job that may then take 12 hours or more to complete.
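To put the excavator example in numbers, the ratio of machine time to operator time can be computed directly. The helper name `leverage` is an assumption made for this illustration; with 1:1 teleoperation the same ratio would be 1.

```python
def leverage(machine_seconds: float, operator_seconds: float) -> float:
    """Machine work time obtained per unit of operator input time."""
    if operator_seconds <= 0:
        raise ValueError("operator_seconds must be positive")
    return machine_seconds / operator_seconds


if __name__ == "__main__":
    # 12 hours of digging for 30 seconds of instruction.
    ratio = leverage(12 * 3600, 30)
    print(f"{ratio:.0f}x")  # prints "1440x"
```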

How robust is your stack compared with what’s available out there now?

Fortunately for us, to the best of our knowledge, nobody else is taking our approach, and we have 3 patents pending to keep it that way!

What makes you think you can compete with the bigger players?

We have a unique and revolutionary approach, combined with an extremely talented and driven team and ties to large industry partners who have stated their interest in collaboration.

Can you work with any robot / sensor setup?

Yes, provided the robot setup we are given allows for direct control and information feedback, and its sensors have suitable capability and sufficient resolution.

Your mission is to take us out amongst the stars. What makes you think you can enable this?

We believe that for humanity to live offworld, the necessary infrastructure must be set up ahead of our arrival, be it on the Moon, Mars or anywhere else in space. This will require us to send robotic pioneers ahead to build homes, labs, factories, mines and farms. In order for these complex structures to be built offworld, a new type of robotic interface that offers hitherto unseen adaptability and flexibility must be realised. We are the missing puzzle piece, and are excited to be a part of humanity’s journey into the space-based industrial revolution.

Where have traditional robotic control systems gone wrong thus far?

We don’t think they have ‘gone wrong’ so much as they have thus far only been used for (and capable of) repetitive tasks in a controlled environment. To that extent, they are very good at what they have been used for up to this point (i.e. mainly mass-production manufacturing settings). However, for the challenges ahead in space, we need a system that adapts to its environment, rather than one that depends upon a known, static one. This requires us to rethink not only how we think when we use a robot, but also how the robot thinks when in operation.

How are you the first to do this?

SE4 is fortunate to have not only talented robotics engineers (as a traditional robotics company would), but also a battle-tested crew of VR software engineers. The majority of VR software engineers, like VR software in general, are in the gaming sector, so having a mixture of robotics and VR specialists in the same room gives us a unique edge. Together with our AI, machine learning and computer vision specialists, this gives us a very holistic software approach.

How do you plan to make money with this?

In the long term, we intend to make money in the currently non-existent ‘space construction’ industry. In the short term, we intend not only to make money but also to train ourselves for space in the construction industry here on Earth.

In Japan, for example, the average excavator operator is over 55 years old, and with a declining population paired with a lack of interest amongst the younger generation, this is only set to worsen.

Although Japan is amongst the most extreme examples, all other developed countries are also experiencing skill shortages in construction to varying extents. This is despite the fact that the construction industry worldwide continues to see constant growth - with the industry predicted to exceed 15.5 trillion USD by 2030!

Our software not only greatly lowers the skill level required to operate many different types of heavy machinery (excavators, backhoes, drilling rigs, cranes, dump trucks, etc.), it also allows one operator to control many machines at once, anywhere on Earth. An operator in Ohio could control a crane in Connecticut, a backhoe in Beijing and an excavator in Estonia, all at the same time! Given the scale of even simple excavation demands worldwide, paired with the obvious cost savings this would allow for, the financial potential is huge.

About Pavel Savkin

Pavel Savkin is the Chief Technology Officer at SE4. As CTO, Pavel combines VR, robotics, computer vision and AI in order to build on his vision of a robotics interface not affected by latency. Born in Russia and raised in Japan from the age of six, Pavel graduated from Waseda University in Japan with a Master’s in Applied Physics in 2017. He has continued his work at Waseda post-graduation as a Research Fellow.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
