Robot-assisted System with Ensenso 3D Camera For Safe Handling of Nuclear Waste

Case Study from IDS Imaging Development Systems

The decommissioning of nuclear facilities poses major challenges for operators. Whether facilities are being decommissioned or safely contained, the amount of nuclear waste to be disposed of is growing at an overwhelming rate worldwide. Automation is increasingly required to handle this waste, but for safety reasons the nuclear industry is reluctant to adopt fully autonomous robotic control methods and prefers remote-controlled industrial robots in hazardous environments. However, complex tasks such as remotely gripping or cutting unknown objects with joysticks and video surveillance cameras are difficult and sometimes even impossible to carry out.

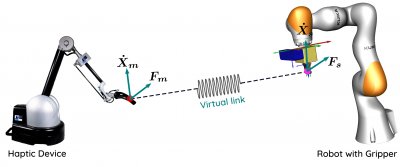

To simplify this process, the National Centre for Nuclear Robotics, led by the Extreme Robotics Lab at the University of Birmingham in the UK, is researching automated options for handling nuclear waste. The robot assistance system developed there enables "shared" control of complex manipulation tasks by means of haptic feedback and vision information provided by an Ensenso 3D camera. The operator always remains in the loop and can take over from the robot's automated actions in case of system failures.

Application

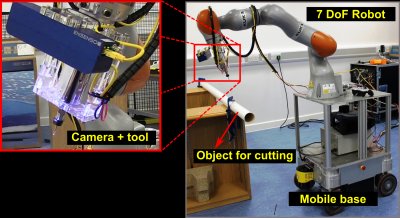

Anyone who has ever tried out a fairground grab machine can confirm it: manual control of grab arms is anything but trivial. Failing to grab a stuffed bunny is harmless, but a failed attempt when handling radioactive waste can be dramatic. To avoid damage with serious consequences for people and the environment, the robot must detect the radioactive objects in the scene extremely accurately and act with precision. The operator literally has it in his hands: it is up to him to identify the correct gripping positions. At the same time, he must correctly solve the inverse kinematics (backward transformation), that is, determine the joint angles of the robot's arm elements that position it correctly while avoiding collisions. The assistance system developed by the British researchers simplifies and speeds up this task immensely. It uses a standard industrial robot equipped with a parallel jaw gripper and an Ensenso N35 3D camera.
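To make the inverse kinematics problem concrete, here is a minimal Python sketch for a hypothetical planar two-link arm with link lengths `l1` and `l2`. A real six-axis industrial robot needs a full 3D solver, and nothing here reflects the Birmingham system's code. The point it illustrates is that even this toy arm usually has two valid joint-angle solutions (elbow configurations) for the same target, and someone has to pick the one that avoids collisions.

```python
import math
from typing import Tuple


def two_link_ik(x: float, y: float, l1: float, l2: float,
                branch: int = 1) -> Tuple[float, float]:
    """Joint angles (theta1, theta2) in radians that place the tip of a planar
    two-link arm at (x, y). branch=+1 or -1 selects one of the two elbow
    configurations. Raises ValueError if the target is unreachable."""
    # Law of cosines gives the cosine of the elbow angle.
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target is outside the reachable workspace")
    s2 = branch * math.sqrt(1.0 - c2 * c2)
    theta2 = math.atan2(s2, c2)
    # Shoulder angle: direction to the target minus the offset caused by link 2.
    theta1 = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return theta1, theta2


def two_link_fk(theta1: float, theta2: float,
                l1: float, l2: float) -> Tuple[float, float]:
    """Forward kinematics: tip position for the given joint angles."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


if __name__ == "__main__":
    # Both elbow configurations reach the same target point (1, 1).
    for branch in (1, -1):
        angles = two_link_ik(1.0, 1.0, 1.0, 1.0, branch)
        print(branch, [round(math.degrees(a), 1) for a in angles])
```

Checking each solution with `two_link_fk` is a cheap way to confirm that an IK result actually reaches the requested point.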

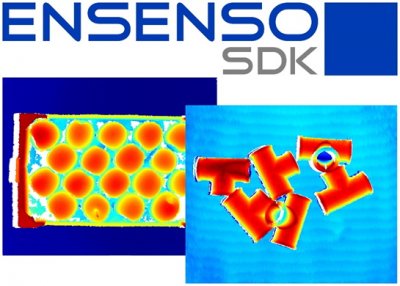

The system autonomously scans unknown waste objects and creates a 3D model of them in the form of a point cloud. This model is highly precise because Ensenso 3D cameras work on the principle of spatial vision (stereo vision), which is modelled on human vision. Two cameras view the object from different positions. Although the content of the two camera images appears identical, the objects appear at slightly different positions in each image. Since the distance and viewing angle between the cameras and the focal length of the optics are known, the Ensenso software can determine the 3D coordinates of the object point imaged by each individual pixel. In this application, the scene is captured from several camera scanning positions, and the views are combined into a complete 3D surface covering all viewing angles. Ensenso's calibration routines help transform the individual point clouds into a global coordinate system so that they fit together into one complete virtual model. The resulting point cloud thus contains all the spatial object information needed to communicate the correct gripping or cutting position to the robot.
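The triangulation principle described above can be illustrated with the textbook relation for a rectified stereo pair, Z = f·B/d, where f is the focal length in pixels, B the baseline between the two cameras and d the disparity (pixel shift) of a point between the images. The Python sketch below is a simplified pinhole model with made-up example parameters, not the Ensenso software's actual processing, which also handles lens distortion, rectification and stereo matching. It also shows why precision depends on distance: a disparity change of Δd shifts the depth by roughly Z²·Δd/(f·B).

```python
from typing import Tuple


def triangulate(u: float, v: float, disparity: float, f_px: float,
                baseline_m: float, cx: float, cy: float) -> Tuple[float, float, float]:
    """3D point (metres) in the left camera frame for pixel (u, v) of the
    left rectified image, given its disparity in pixels."""
    if disparity <= 0:
        raise ValueError("disparity must be positive (point at infinity or mismatch)")
    z = f_px * baseline_m / disparity   # depth from similar triangles
    x = (u - cx) * z / f_px             # back-project through the pinhole model
    y = (v - cy) * z / f_px
    return x, y, z


def depth_step(z: float, f_px: float, baseline_m: float,
               disparity_step_px: float = 1.0) -> float:
    """Approximate depth change caused by a disparity change of disparity_step_px."""
    return z * z * disparity_step_px / (f_px * baseline_m)


if __name__ == "__main__":
    # Hypothetical camera: f = 1000 px, baseline = 0.1 m, principal point (640, 512).
    print(triangulate(740, 512, 50, 1000, 0.1, 640, 512))
    print(depth_step(2.0, 1000, 0.1, disparity_step_px=0.1))
```

With these hypothetical numbers, a point with 50 px disparity lies 2 m away, and sub-pixel matching (0.1 px) corresponds to a depth step of a few millimetres at that range.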

Figure: Extreme Robotics Lab’s 3D vision-guided semi-autonomous robotic cutting of a metallic object in a radioactive environment.

With the help of the software, the Ensenso 3D camera takes over the perception and evaluation of depth information for the operator, whose cognitive load is considerably reduced as a result. The assistance system combines the haptic features of the object to be gripped with a dedicated grasping algorithm.

"The scene cloud is used by our system to automatically generate several stable gripping positions. Since the point clouds captured by the 3D camera are high-resolution and dense, it is possible to generate very precise gripping positions for each object in the scene. Our 'hypothesis ranking algorithm' then determines the next object to pick up, taking the robot's current position into account," explains Dr Naresh Marturi, Senior Research Scientist at the National Centre for Nuclear Robotics.

The principle is similar to that of the skill game Mikado, in which the sticks must be removed one at a time without moving any of the others.
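The article does not describe how the hypothesis ranking algorithm works internally. As a purely illustrative sketch, assume each grasp hypothesis carries a position and a stability score; the `GraspCandidate` type, the `min_quality` threshold and the `quality_weight` factor below are all hypothetical. The sketch discards unstable grasps, then prefers candidates close to the robot's current tool position, with stability as a secondary bonus.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class GraspCandidate:
    """Hypothetical grasp hypothesis generated from the scene point cloud."""
    object_id: str
    position: Tuple[float, float, float]  # grasp centre in the world frame (m)
    quality: float                        # stability score in [0, 1]


def rank_grasps(candidates: Sequence[GraspCandidate],
                tool_position: Tuple[float, float, float],
                min_quality: float = 0.5,
                quality_weight: float = 0.2) -> List[GraspCandidate]:
    """Return stable grasps ordered from most to least preferred.

    Cost = distance from the current tool position minus a small bonus for
    stability, so nearby, stable grasps come first."""
    def cost(g: GraspCandidate) -> float:
        return math.dist(g.position, tool_position) - quality_weight * g.quality

    stable = [g for g in candidates if g.quality >= min_quality]
    return sorted(stable, key=cost)


if __name__ == "__main__":
    scene = [
        GraspCandidate("obj-A", (0.50, 0.00, 0.10), 0.9),
        GraspCandidate("obj-B", (0.10, 0.05, 0.00), 0.6),
        GraspCandidate("obj-C", (0.05, 0.00, 0.00), 0.3),  # below the stability threshold
    ]
    for g in rank_grasps(scene, tool_position=(0.0, 0.0, 0.0)):
        print(g.object_id, g.quality)
```

A real ranking would likely also consider reachability, collision risk with neighbouring objects (the Mikado aspect) and gripper approach direction; a weighted cost like this simply makes such trade-offs explicit.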

The computed path guidance enables the robot to move smoothly and evenly along a desired path to the target gripping position. Like a navigation system, it helps the operator steer the robot arm to a safe grasp, if necessary past other unknown and hazardous objects. To do this, the system calculates a safe corridor and uses haptic feedback to keep the operator from leaving it.
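One common way to realise such a haptic corridor is a "virtual fixture": while the tool stays within a given radius of the planned path, no force is applied, and once it drifts outside, a spring-like force pushes the operator's hand back toward the path. The sketch below assumes a straight path segment and a linear spring; the radius and stiffness values are hypothetical, and the article does not say which force law the Birmingham system uses.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def closest_point_on_segment(p: Vec3, a: Vec3, b: Vec3) -> Vec3:
    """Point on the path segment a-b that is nearest to p."""
    ab = _sub(b, a)
    denom = _dot(ab, ab)
    # Projection parameter, clamped so the result stays on the segment.
    t = 0.0 if denom == 0 else max(0.0, min(1.0, _dot(_sub(p, a), ab) / denom))
    return (a[0] + t * ab[0], a[1] + t * ab[1], a[2] + t * ab[2])


def corridor_force(p: Vec3, a: Vec3, b: Vec3,
                   radius: float, stiffness: float) -> Vec3:
    """Haptic force (N) for tool position p: zero inside the corridor of the
    given radius (m) around segment a-b, otherwise a spring force with the
    given stiffness (N/m), proportional to how far p lies outside the corridor."""
    c = closest_point_on_segment(p, a, b)
    offset = _sub(p, c)
    dist = math.sqrt(_dot(offset, offset))
    if dist <= radius:
        return (0.0, 0.0, 0.0)
    scale = -stiffness * (dist - radius) / dist   # negative: points back toward the path
    return (scale * offset[0], scale * offset[1], scale * offset[2])


if __name__ == "__main__":
    start, goal = (0.0, 0.0, 0.0), (1.0, 0.0, 0.0)
    print(corridor_force((0.5, 0.01, 0.0), start, goal, 0.02, 500.0))  # inside
    print(corridor_force((0.5, 0.03, 0.0), start, goal, 0.02, 500.0))  # 1 cm outside
```

Keeping the force at zero inside the corridor leaves the operator free to move naturally and only resists motions that would leave the safe region.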

The system maps the operator's natural hand movements precisely and reliably onto the corresponding movements of the robot in real time. The operator thus always retains manual control and can take over if a component fails. He can simply switch off the AI and return to purely human control by turning off the "force feedback mode". In line with the principle of shared control between human and machine, the system thus remains under control at all times, which is essential in an environment with the highest level of danger.
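The hand-to-robot mapping and the "force feedback mode" switch can be pictured with a small sketch. The robot target follows the operator's hand displacement from a reference pose, optionally scaled, and guidance forces reach the haptic device only while force feedback is on. The `SharedControlMapper` class, its fields and the scaling scheme are hypothetical. A real system would also map orientation, limit velocities and run at the haptic device's update rate.

```python
from dataclasses import dataclass
from typing import Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class SharedControlMapper:
    """Hypothetical hand-to-robot position mapping with a force-feedback switch."""
    hand_origin: Vec3           # haptic device position when control was engaged
    robot_origin: Vec3          # robot tool position at the same moment
    scale: float = 1.0          # motion scaling (1.0 = one-to-one)
    force_feedback: bool = True

    def robot_target(self, hand_pos: Vec3) -> Vec3:
        """Robot tool target that reproduces the hand's displacement, scaled."""
        return tuple(r0 + self.scale * (h - h0)
                     for r0, h, h0 in zip(self.robot_origin, hand_pos, self.hand_origin))

    def haptic_force(self, guidance_force: Vec3) -> Vec3:
        """Force sent to the operator's haptic device.

        With force feedback switched off, no guidance is rendered and the
        operator steers the robot unassisted."""
        return guidance_force if self.force_feedback else (0.0, 0.0, 0.0)


if __name__ == "__main__":
    mapper = SharedControlMapper((0.0, 0.0, 0.0), (1.0, 0.0, 0.5), scale=2.0)
    print(mapper.robot_target((0.1, 0.0, 0.0)))
    mapper.force_feedback = False        # operator switches to purely manual control
    print(mapper.haptic_force((0.0, -5.0, 0.0)))
```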

Camera

"For all our autonomous grasp planning, remote control and visual object tracking tasks, we use Ensenso N35 3D cameras with blue LEDs (465 nm) mounted on the end effector of the robots along with other tools," says Dr Naresh Marturi. Most of the systems from the Extreme Robotics Lab have so far been equipped with a single 3D camera. "However, to speed up 3D model building, we have recently upgraded our systems to use three additional scene-mounted Ensenso 3D cameras alongside the one on board the robot."

The Ensenso N series is well suited to this task. It was specially designed for use in harsh environmental conditions. Thanks to its compact design, the N series is equally suitable for space-saving stationary use or for mobile use on a robot arm, capturing both moving and static objects in 3D. Even in difficult lighting conditions, the integrated projector uses a pattern mask with a random dot pattern to project a high-contrast texture onto the object, supplementing surface structures that are absent or only weakly present. The aluminum housing of the N30 models ensures optimal heat dissipation from the electronic components and thus stable light output even under extreme ambient conditions. This ensures consistently high quality and robustness of the 3D data.

Cameras of the Ensenso N family are easy to set up and operate via the Ensenso SDK. The SDK offers GPU-based image processing for even faster 3D data processing. In multi-camera operation, as required in this application, it can output a single 3D point cloud from all cameras and compose the 3D point clouds from multiple viewing directions live. For the assistance system, the researchers developed their own C++ software to process the 3D point clouds captured by the cameras.

"Our software uses the Ensenso SDK (multi-threaded) and its calibration routines to overlay texture on the high-resolution point clouds and then transform these textured point clouds into a world coordinate system," explains Dr Naresh Marturi. "The Ensenso SDK is fairly easy to integrate with our C++ software. It offers a variety of straightforward functions and methods to capture and handle point clouds as well as camera images. Moreover, with CUDA support, the SDK routines enable us to register multiple high-resolution point clouds to generate high-quality scene clouds in a global frame. This is very important for us, especially for generating precise grasp hypotheses."

Figure: Dr Naresh Marturi, Senior Research Scientist in Robotics (left), Maxime Adjigble, Robotics Research Engineer (right)
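Bringing several point clouds into one world frame, as Dr Marturi describes, comes down to applying each camera's calibrated pose, a rotation R and a translation t, to its points (p_world = R·p_cam + t) and concatenating the results. The plain-Python sketch below illustrates only that arithmetic with hypothetical poses. It does not call the Ensenso SDK, whose own calibration and registration routines (including texture overlay and any fine alignment) are what the Birmingham team actually uses.

```python
from typing import Iterable, List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


def transform_points(points: Sequence[Vec3], rotation: Mat3,
                     translation: Vec3) -> List[Vec3]:
    """Map camera-frame points into the world frame: p_world = R @ p_cam + t."""
    return [
        tuple(sum(rotation[i][j] * p[j] for j in range(3)) + translation[i]
              for i in range(3))
        for p in points
    ]


def merge_clouds(clouds: Iterable[Tuple[Sequence[Vec3], Mat3, Vec3]]) -> List[Vec3]:
    """Concatenate several clouds, each given with its camera pose, in the world frame."""
    scene: List[Vec3] = []
    for points, rotation, translation in clouds:
        scene.extend(transform_points(points, rotation, translation))
    return scene


if __name__ == "__main__":
    identity = ((1, 0, 0), (0, 1, 0), (0, 0, 1))
    rot_z90 = ((0, -1, 0), (1, 0, 0), (0, 0, 1))   # camera rotated 90 degrees about z
    robot_cam = ([(1.0, 2.0, 3.0)], identity, (0.0, 0.0, 0.0))
    scene_cam = ([(1.0, 0.0, 0.0)], rot_z90, (1.0, 0.0, 0.0))
    print(merge_clouds([robot_cam, scene_cam]))
```

For a camera on the robot's end effector, the pose changes with every scanning position, so R and t would come from the robot's current pose combined with the hand-eye calibration; for the scene-mounted cameras, they stay fixed after calibration.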

Main advantages of the system

- Operators do not need to worry about scene depth, how to reach the object or where to grasp it. The system works all of this out in the background and guides the operator to exactly the place where the robot can best grasp the object.

- With haptic feedback, operators can feel the robot in their hands even though it is not physically in front of them.

- By combining haptics and grasp planning, operators can move objects in a remote scene easily and quickly, with very low cognitive load.

This saves time and money, avoids errors and increases safety.

Outlook

Researchers at the Extreme Robotics Lab in Birmingham are currently extending the method to use a multi-fingered hand instead of a parallel jaw gripper. This should increase flexibility and reliability when gripping complex objects. In future, the operator will also be able to feel the forces acting on the fingers of the remote-controlled robot as it grips an object. Fully autonomous gripping methods are also being developed, in which the robot arm is controlled by an AI and guided by an automatic vision system. The team is also working on visualization tools to improve human-robot collaboration when controlling remote robots via a "shared control" system.

This is a promising approach for the safety and health of us all: the handling of hazardous objects such as nuclear waste ultimately concerns everyone. By reliably capturing the relevant object information, Ensenso 3D cameras make an important contribution to this increasingly urgent task worldwide.

Client / University

The Extreme Robotics Lab at the University of Birmingham, UK, is a market leader in many of the components needed for the growing effort to roboticise nuclear operations.

https://www.birmingham.ac.uk/research/activity/metallurgy-materials/robotics/our-technologies.aspx

About IDS Imaging Development Systems GmbH:

The industrial camera manufacturer IDS Imaging Development Systems GmbH develops high-performance, easy-to-use USB, GigE and 3D cameras with a wide spectrum of sensors and variants. The almost unlimited range of applications covers multiple non-industrial and industrial sectors in the field of equipment, plant and mechanical engineering. In addition to its successful CMOS cameras, the company is expanding its portfolio with intelligent, vision app-based cameras. The novel image processing platform IDS NXT is freely programmable and extremely versatile.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow

IDS Imaging Development Systems Inc.

World-class image processing and industrial cameras "Made in Germany". Machine vision systems from IDS are powerful and easy to use. IDS is a leading provider of area scan cameras with USB and GigE interfaces, 3D industrial cameras and industrial cameras with artificial intelligence. Industrial monitoring cameras with streaming and event recording complete the portfolio. One of IDS's key strengths is customized solutions. An experienced project team of hardware and software developers makes almost anything technically possible to meet individual specifications - from custom design and PCB electronics to specific connector configurations. Whether in an industrial or non-industrial setting: IDS cameras and sensors assist companies worldwide in optimizing processes, ensuring quality, driving research, conserving raw materials, and serving people. They provide reliability, efficiency and flexibility for your application.
