The traditional vision architecture is changing, with an evolution from cameras and sensors to networked and smart-enabled, compact embedded devices with the processing power required for real-time analysis.

Evolution of Embedded Vision Technologies for Robotics

Jonathan Hou, Chief Technology Officer | Pleora Technologies Inc.

Tell us about Pleora and your role with the company.

Pleora is headquartered in Ottawa, Canada, and we’ve been a global leader in machine vision and sensor networking for just shy of two decades. Our first products solved real-time imaging challenges for industrial automation applications. As an industry leader, we helped co-found and contribute to the GigE Vision, USB3 Vision, and GenICam standards. In recent years, our focus has expanded towards sensor networking, including embedded hardware and software technologies for robotics applications.

I am Jonathan Hou, the Chief Technology Officer with Pleora, and I am responsible for leading our R&D team in developing and delivering innovative and reliable real-time networked sensor solutions.

How is embedded vision being used in robotics?

Embedded vision is bringing new processing power and expanded data sources to robotics applications. One key area of advancement in this space is smart imaging devices and edge processing.

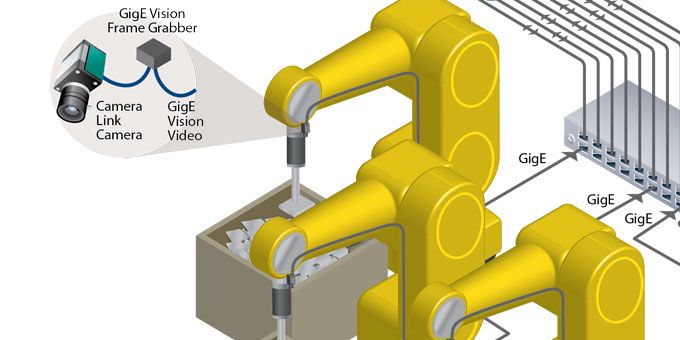

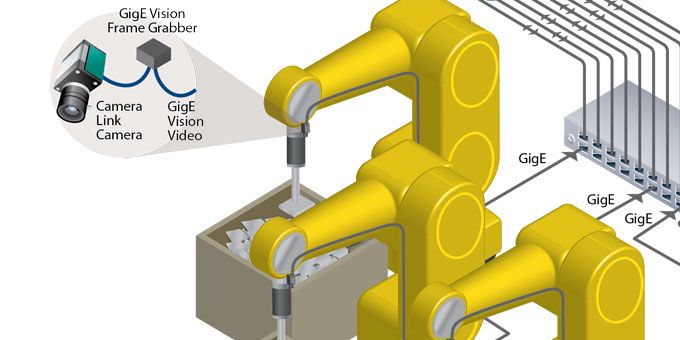

Traditionally, a camera or sensor on a robot would transmit data back to a central processor for decision-making. Bandwidth poses a challenge with this approach, in particular as you look ahead to new applications integrating numerous image and data sources. Component costs, size, weight, and power are also significant design barriers in robotics applications.

Currently, we are seeing an evolution towards smart devices, which bypass a traditional camera in favor of a sensor and compact processing board. A smart device enables edge computing -- which, as it sounds, is decision-making right at the sensor level. The smart devices receive and process data, make a decision, then send the data to other devices, or to local or cloud-based processing and analysis. Local decision-making significantly reduces the amount of data that needs to be transmitted back to a centralized processor, reducing bandwidth and latency demands while reserving the centralized CPU power for more complex analysis tasks. The compact smart devices also allow intelligence to be located at various points within a robotics system, providing scalability through a distributed architecture.
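To make the bandwidth argument concrete, here is a minimal Python sketch comparing a raw video stream with a stream of per-frame decisions. The resolution, frame rate, threshold, and the `edge_decision` function are illustrative assumptions, not anything specific to Pleora's products; a real smart device would run a far more capable analysis.

```python
import json

# Hypothetical sensor geometry and frame rate, chosen only for illustration.
WIDTH, HEIGHT, BYTES_PER_PIXEL, FPS = 1920, 1080, 1, 30


def raw_stream_bytes_per_second(width=WIDTH, height=HEIGHT,
                                bytes_per_pixel=BYTES_PER_PIXEL, fps=FPS):
    """Bandwidth needed to send every uncompressed frame to a central processor."""
    return width * height * bytes_per_pixel * fps


def edge_decision(frame, threshold=200):
    """Toy on-device analysis: flag the frame if any pixel exceeds a threshold.

    `frame` is a list of rows of 8-bit intensities. Only the shape of the
    result (a small decision record) matters for this comparison.
    """
    bright = sum(1 for row in frame for px in row if px > threshold)
    return {"defect": bright > 0, "bright_pixels": bright}


def decision_stream_bytes_per_second(decision, fps=FPS):
    """Bandwidth needed to send one small JSON decision per frame instead."""
    return len(json.dumps(decision).encode("utf-8")) * fps


if __name__ == "__main__":
    # Tiny stand-in frame with a single bright pixel.
    frame = [[0] * 8 for _ in range(8)]
    frame[3][4] = 255
    decision = edge_decision(frame)
    print(decision)
    print(raw_stream_bytes_per_second(), "bytes/s as raw frames")
    print(decision_stream_bytes_per_second(decision), "bytes/s as decisions")
```

Under these assumed numbers, the raw stream needs tens of megabytes per second while the decision stream needs on the order of a kilobyte per second, which is the gap that local decision-making exploits.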

Smart devices integrating a sensor and processing board are a compact, low-cost way to bring edge processing to robotics applications.

As robotics changes, what new demands are you seeing for embedded vision technology?

The traditional vision architecture is changing, with an evolution from cameras and sensors to networked and smart-enabled, compact embedded devices with the processing power required for real-time analysis. This is especially applicable in robotics, where repeated and automated processes -- such as edge detection in a pick and place system -- are ideal for embedded processing.
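As a rough illustration of why edge detection suits embedded processing, the sketch below finds the vertical edges of a part in a small grayscale image using a simple central-difference gradient. The function names, the threshold, and the toy image are assumptions made for this example; production systems typically use optimized operators (Sobel, Canny) on dedicated hardware.

```python
def horizontal_gradient(image):
    """Absolute central-difference gradient along each row (borders left at 0)."""
    out = []
    for row in image:
        g = [0] * len(row)
        for x in range(1, len(row) - 1):
            g[x] = abs(row[x + 1] - row[x - 1])
        out.append(g)
    return out


def find_vertical_edges(image, threshold=100):
    """Return column indices where every row's gradient exceeds the threshold."""
    grad = horizontal_gradient(image)
    width = len(image[0])
    return [x for x in range(width) if all(row[x] > threshold for row in grad)]


if __name__ == "__main__":
    # A bright part (255) occupying columns 3-5 on a dark background (0).
    image = [[0, 0, 0, 255, 255, 255, 0, 0] for _ in range(4)]
    print(find_vertical_edges(image))
```

The work per pixel is a subtraction and a comparison, repeated identically on every frame, which is the kind of fixed, repetitive task that fits a compact processing board next to the sensor.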

One significant change is the amount of data now being generated, and more importantly how this data can be used. Previously, production data was typically limited to a facility. Now we’re seeing an evolution towards cloud-based data analysis, where a wider data set from a number of global facilities can potentially be used to improve inspection processes. Providing this data is a first step towards machine learning, AI, and eventually deep learning for robotics applications that leverage a deeper set of information to improve processing and analysis.

How is Pleora working to meet these new challenges?

We see sensor networks within robotics evolving into networks of “smart devices” that are in constant communication, passing processed data to enable “smarter” robotics applications.

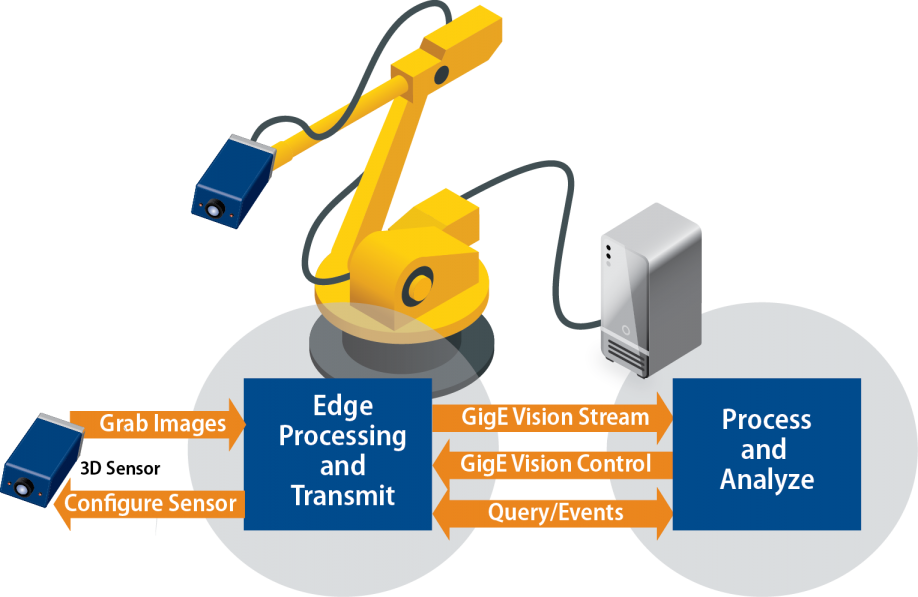

As industrial automation systems evolve towards a networked environment, we see strong opportunities for our hardware and software technologies. Where we traditionally provided an external frame grabber to convert an image feed into GigE Vision or USB3 Vision, today that is a “smart device” that analyzes data and makes a decision before passing information on to the next device for further processing.

Software will also play a key role in robotics, where cost and size are key requirements. One often overlooked factor as we look ahead to a networked manufacturing floor is ensuring all of these devices speak a common language. For example, we’ve developed software techniques that convert any image or data sensor into a GigE Vision device. This enables compact, low-cost smart devices that can seamlessly communicate with each other and back to local or cloud processing.

One example of this is in 3D inspection, such as in the automotive market, where a sensor on a robotic arm is used to identify surface defects and discontinuities during manufacturing. Today, designers want to convert the image feeds from these sensors into GigE Vision so they can use traditional machine vision processing for analysis. Looking ahead, there’s an obvious value in being able to integrate inspection systems so they can share data and analysis.

These 3D sensors are compact, low power and often part of a mobile inspection system, meaning there’s no room for additional hardware such as a frame grabber. With a software approach, these devices can appear as “virtual GigE sensors” to create a seamlessly integrated network.

The growing emphasis on data will also impact robotics system design. Image and data sensors will still play an important role in collecting the vast amounts of information required to support cloud-based analysis. The need to transport large amounts of potentially sensitive, high-bandwidth sensor data to the cloud will also help drive technology development in new areas, such as lossless compression, encryption, security, higher bandwidth, and wireless sensor interfaces.
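For readers unfamiliar with the term, “lossless” compression means the receiver can rebuild the sensor data exactly, byte for byte. The sketch below shows that round trip using Python's standard `zlib` module; the `pack` and `unpack` helper names and the synthetic payload are assumptions for illustration, and a deployed system would likely use a codec tuned for image data.

```python
import zlib


def pack(sensor_bytes, level=6):
    """Losslessly compress a sensor payload before it is sent upstream."""
    return zlib.compress(sensor_bytes, level)


def unpack(payload):
    """Recover the exact original bytes on the receiving side."""
    return zlib.decompress(payload)


if __name__ == "__main__":
    # Synthetic payload: a flat background region followed by a short ramp.
    data = bytes([10] * 4096 + list(range(256)))
    packed = pack(data)
    assert unpack(packed) == data  # nothing was lost in transit
    print(len(data), "bytes before,", len(packed), "bytes after")
```

How much a payload shrinks depends heavily on its content: uniform regions compress well, while noisy sensor data may barely compress at all.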

How do you see the cloud playing a role to advance robotics?

The cloud -- and access to a wider data set -- will play an important role in bringing machine learning and AI to robotics. Instead of relying on rules-based programming, robots can be trained to make decisions using algorithms extracted from the collected data. Evolving from purpose-built inspection systems, we could see “learning systems” that adapt and find new types of defects or perform new functions based on access to a wider data set.

For the robotics industry, inexpensive cloud computing means algorithms that were once computationally too expensive due to dedicated infrastructure requirements are now affordable. For applications such as object recognition, detection and classification, the “learning” portion of the process that once required vast computing resources can now happen in the cloud versus via a dedicated, owned and expensive infrastructure. The processing power required for imaging systems to accurately and repeatedly simulate human understanding, learn new processes, identify and even correct flaws, is now within reach for any system designer.

Thanks to the cloud, rather than “owning” the image processing capabilities, end-users can access data shared across millions of systems and devices to help support continual process and production improvements.

Cloud-based processing enables robotics applications to access a wider data set and help lead the way towards machine learning and artificial intelligence for imaging applications.

What about machine learning? How can advances here help to move robotics forward?

Looking ahead to machine learning and AI, the natural progression will be developing a process to utilize the cloud to its full advantage. Ideally robotics will have unlimited remote access to real-time data that can be used to continually refine intelligence. Beyond identifying objects, a robotic system could program itself to predict and understand patterns, know what its next action should be, and execute it.

The combination of network-ready and smart-enabled imaging sources in conjunction with constantly evolving and improving algorithms will move robotics towards the beginning phases of Industry 4.0 and the Internet of Things.

Using software, robotics designers can convert a sensor into a virtual GigE Vision device that can seamlessly communicate with other devices as well as local and cloud-based processing.

What are some key challenges with successful implementation of the cloud and advanced machine learning in robotics?

The concept of shared data is fundamentally new for the vision industry. With a scalable cloud-based approach to learning from new data sets, processing algorithms can be continually updated and improved to drive efficiency.

As part of the evolution to cloud processing and advanced machine learning, we’ll recognize that data ownership and analytics services are of increasing importance. The real value is a large data set from a global source of events that can help quickly train the computer model to identify objects, defects and flaws, as well as support a migration toward self-learning robotics systems.

Where we locate intelligence in a vision system will also change. Previously, robotics system designers had to transport high-bandwidth, low-latency video from sensor sources to a centralized PC for processing and analysis. Today, increasingly sophisticated processing can happen very close to or at the sensor itself, in the form of a smart embedded device.

One commonly missed area when looking at technology development is the end-user. Even as we consider machine learning and AI for robotics, there is often a human operator involved in the process. We’ve made a concentrated effort in our product development to always consider the end-user -- whether that’s a system integrator or a technician on an assembly line -- to ensure we provide a straightforward solution that solves a real user problem and doesn’t add complexity to their daily tasks.

Looking down the road five years, what advances do you see in embedded vision and its use in robotics?

Sensor and embedded technology will continue to get smaller, less expensive and more powerful -- all key advantages for the robotics industry. We’re already recognizing the benefits of edge processing for basic tasks in robotics applications, and that will continue to expand with the introduction of more powerful embedded technologies.

As sensor technology becomes more accessible, one of the promises of Industry 4.0 is the ability to improve inspection with 3D, hyperspectral, and IR capabilities. “High definition inspection” will leverage different sensors, edge processing, and wireless transmission to speed inspection, improve quality, and increase automation as part of an Industry 4.0 solution. However, each sensor type has its own interface and data format, meaning designers today can’t easily create “mix and match” systems to benefit from advanced sensor capabilities. Novel software techniques will be required to fuse traditional video images with 3D, hyperspectral, and IR data in order to create an augmented view of a robotics process.

The content & opinions in this article are the author’s and do not necessarily represent the views of RoboticsTomorrow
