Advancing Machine Design with the Power of Collaboration and AI

Boston Dynamics Expands Collaboration with NVIDIA to Accelerate AI Capabilities in Humanoid Robots

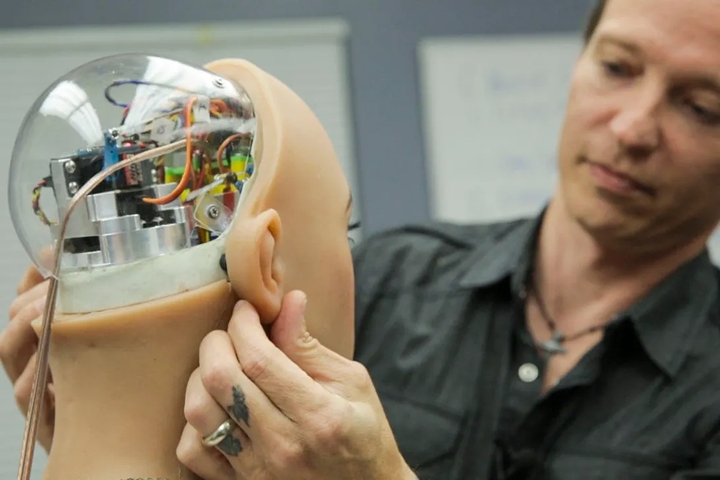

'Eyes, hands, brains and mobility' will define robotics beyond 2025

Trend 2025: Energy requirements often depend on the size of the AI model

Exploring the Future of Supply Chain in 2025

OSARO CEO Derik Pridmore's 2025 Robotics Predictions: AI-Powered Solutions and Practical Automation Take Center Stage

AI Route Optimization Saves Money, Cuts Fuel Consumption and Provides Faster Delivery Times

Beyond the Beaker: Robots and AI Take Center Stage in Modern Chemistry

The Battle of Price and Progress: Making AI Affordable for the Smaller Businesses

Carnegie Robotics Unveils Cutting-Edge Body-Worn Compute System for Military Use

Can Predictive AI Lead Robotics Education to Its Next Evolution?

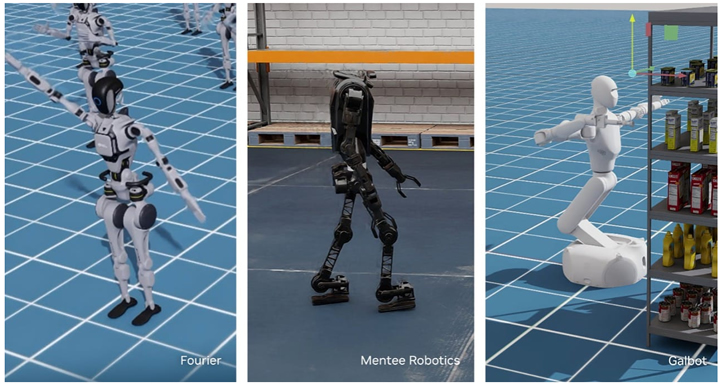

NVIDIA Advances Robot Learning and Humanoid Development With New AI and Simulation Tools

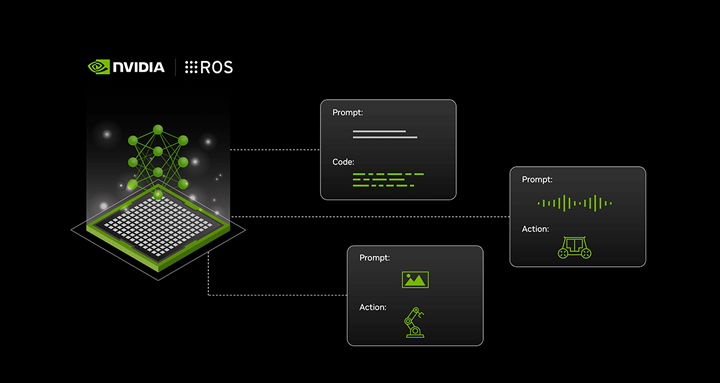

NVIDIA Brings Generative AI Tools, Simulation and Perception Workflows to ROS Developer Ecosystem

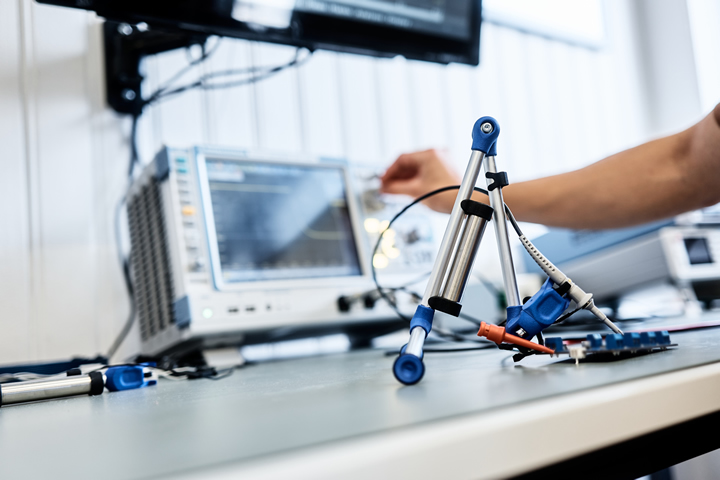

Reliable Industrial Robots with AI - Enhancing Fail-Safe Operations with Predictive Maintenance

Featured Product

PI USA - Gantry Stages for Laser Machining and Additive Manufacturing
